Frequently Asked Questions

Account

Can I create multiple Galaxy accounts?

- You ARE NOT allowed to create more than 1 account per Galaxy server.

- You ARE allowed to have accounts on different servers.

For example, you are allowed to have 1 account on Galaxy US, and another account on Galaxy EU, but never 2 accounts on the same Galaxy.

WARNING: Having multiple accounts on the same Galaxy server is a violation of the terms of service and may result in deletion of your accounts.

Need more disk space?

- See this tip for ways to free up space in your account

- Contact the admins of your Galaxy server to ask about possibilities for temporarily increasing your quota.

Other tips:

- Forgot your password? You can request a reset link on the login page.

- If you want to associate your account with a different email address, you can do so under User -> Preferences in the top menu bar

- To start over with a new account, delete your existing account(s) before creating a new one. This can be done in the User -> Preferences menu in the top bar.

Changing account email or password

- Make sure you are logged in to Galaxy.

- Go to User > Preferences in the top menu bar.

- To change email and public name, click on Manage Information and to change password, click on Change Password.

- Make the changes and click on the Save button at the bottom.

- To change email successfully, verify your account by email through the activation link sent by Galaxy.

Note: Don’t open another account if your email changes; update the email on the existing account instead. A new account will be detected as a duplicate, and your account will be disabled and deleted.

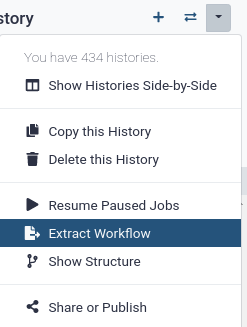

How can I reduce quota usage while still retaining prior work (data, tools, methods)?

- Download Datasets as individual files or entire Histories as an archive. Then purge them from the public server.

- Transfer/Move Datasets or Histories to another Galaxy server, including your own Galaxy. Then purge.

- Copy your most important Datasets into a new/other History (inputs, results), then purge the original full History.

- Extract a Workflow from the History, then purge it.

- Back-up your work. It is a best practice to download an archive of your FULL original Histories periodically, even those still in use, as a backup.

Resources: Much discussion about all of the above options can be found at the Galaxy Help forum.

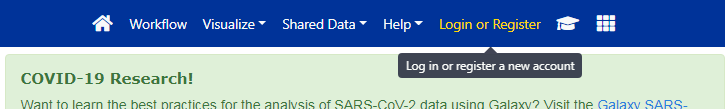

How do I create an account on a public Galaxy instance?

To create an account at any public Galaxy instance, choose your server from the list of available Galaxy platforms.

There are 3 main public Galaxy servers: UseGalaxy.org, UseGalaxy.eu, and UseGalaxy.org.au.

- Click on “Login or Register” in the masthead on the server.

- Click on Register here and fill in the required information.

- Click on the Create button to create your account.

- Check for a Confirmation Email in the email you used for account creation.

- Click on the Email confirmation link to fully activate your account.

How to update account preferences?

- Log in to Galaxy

- Navigate to User > Preferences on the top menu bar.

- Here you can update various preferences, such as:

- pref-info Manage Information (edit your email addresses, custom parameters, or change your public name)

- pref-password Change Password

- pref-identities Manage Third-Party Identities (connect or disconnect access to your third-party identities)

- pref-permissions Set Dataset Permissions for New Histories (grant others default access to newly created histories; changes made here will only affect histories created after these settings have been stored)

- pref-dataprivate Make All Data Private

- pref-apikey Manage API Key (access your current API key or create a new one)

- pref-cloud Manage Cloud Authorization (add or modify the configuration that grants Galaxy access to your cloud-based resources)

- pref-toolboxfilters Manage Toolbox Filters (customize your Toolbox by displaying or omitting sets of Tools)

- pref-custombuilds Manage Custom Builds (add or remove custom builds using history datasets)

- pref-signout Sign out of Galaxy (signs you out of all sessions)

- pref-notifications Enable notifications (allow push and tab notifications on job completion)

- pref-delete Delete Account (on this Galaxy server)

Analysis

Adding a custom database/build (dbkey)

Galaxy may have several reference genomes built-in, but you can also create your own.

- In the top menu bar, go to User and select Custom Builds

- Choose a name for your reference build

- Choose a dbkey for your reference build

- Under Definition, select the option FASTA-file from history

- Under FASTA-file, select your fasta file

- Click the Save button

Beware of Cuts

Galaxy has several different cut tools.

Warning: The section below uses the Cut tool. There are two cut tools in Galaxy for historical reasons. This example uses the tool with the full name Cut columns from a table (cut). However, the same logic applies to the other tool; it simply has a slightly different interface.

Extended Help for Differential Expression Analysis Tools

The error and usage help in this FAQ applies to:

- DESeq2

- Limma

- edgeR

- goseq

- DEXSeq

- Diffbind

- StringTie

- Featurecounts

- HTSeq

- Kallisto

- Salmon

- Sailfish

- DexSeq-count

Expect odd errors or content problems if any of the usage requirements below are not met:

- Differential expression tools all require replicate count datasets when used in Galaxy: at least two per factor level, and the same number per factor level. Each replicate must contain unique content.

- Factor/Factor level names should only contain alphanumeric characters and optionally underscores. Avoid starting these with a number and do not include spaces.

- If the tool uses Conditions, the same naming requirements apply. DEXSeq additionally requires that the first Condition is labeled as Condition.

- Reference annotation should be in GTF format for most of these tools, with no header/comment lines. Remove all GTF header lines with the tool Remove beginning of a file. If any comment lines are internal to the file, those should also be removed. The tool Select can be used.

- Make sure that if a GTF dataset is used, and tool form settings are expecting particular attributes, those are actually in your annotation file (example: gene_id).

- GFF3 data (when accepted by a tool) should have a single # comment line, and any others (at the start or internal) that usually start with ## should be removed. The tool Select can be used.

- If a GTF dataset is not available for your genome, a two-column tabular dataset containing transcript <tab> gene can be used instead with most of these tools. Some reformatting of a different annotation file type might be needed. Tools in the groups under GENERAL TEXT TOOLS can be used.

- Make sure that if your count inputs have a header, the option Files have header? is set to Yes. If no header, set to No.

- Custom genomes/transcriptomes/exomes must be formatted correctly before mapping.

- Any reference annotation should be an exact match for any genome/transcriptome/exome used for mapping. Build and version matter.

- Avoid using UCSC’s annotation extracted from their Table Browser. All GTF datasets from the UCSC Table Browser have the same content populated for the transcript_id and gene_id values. Both are the “transcript_id”, which creates scientific content problems, effectively meaning that the counts will be summarized “by transcript” and not “by gene”, even if labeled in a tool’s output as being “by gene”. It is usually possible to extract gene/transcript in tabular format from other related tables. Review the Table Browser usage at UCSC for how to link/extract data or ask them for guidance if you need extra help to get this information for a specific data track.

Note: Selected genomes at UCSC do have a reference annotation GTF pre-computed and available with a Gene Symbol populated into the “gene_id” value. Find these in the UCSC “Downloads” area. When available, the link can be directly copy/pasted into the Upload tool in Galaxy. Allow Galaxy to autodetect the datatype to produce an uncompressed GTF dataset in your history ready to use with tools.
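If you prefer to prepare the annotation on your own computer before uploading, the two-column transcript <tab> gene table described above can be derived from a GTF with standard command-line tools. The following is a minimal sketch, not a Galaxy tool: it assumes a tab-separated GTF whose ninth column holds attributes written as gene_id "..."; transcript_id "...";, and the function names gtf_tx2gene and count_identical_ids are hypothetical helpers introduced here for illustration.

```shell
# Print unique "transcript<TAB>gene" pairs from a GTF read on stdin.
# Assumption: attributes look like  gene_id "G1"; transcript_id "T1";
# and attribute values do not themselves contain ";".
gtf_tx2gene() {
  awk -F '\t' '
    /^#/ { next }                       # skip header/comment lines
    {
      gene = ""; tx = ""
      n = split($9, attrs, ";")
      for (i = 1; i <= n; i++) {
        a = attrs[i]
        sub(/^[ ]+/, "", a)             # trim leading spaces
        if (a ~ /^gene_id /)       { gene = a; sub(/^gene_id /, "", gene);     gsub(/"/, "", gene) }
        if (a ~ /^transcript_id /) { tx = a;   sub(/^transcript_id /, "", tx); gsub(/"/, "", tx) }
      }
      # emit each pair once, skipping lines that lack either attribute
      if (gene != "" && tx != "" && !seen[tx "\t" gene]++) print tx "\t" gene
    }'
}

# Count pairs whose transcript and gene identifiers are the same string,
# the symptom described above for GTF data from the UCSC Table Browser.
count_identical_ids() {
  awk -F '\t' '$1 == $2 { n++ } END { print n + 0 }'
}
```

For example, gtf_tx2gene < annotation.gtf > tx2gene.tsv writes the table, and count_identical_ids < tx2gene.tsv reports how many rows have identical identifiers. If that count equals the number of rows, the annotation is probably summarizing "by transcript".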

My jobs aren't running!

- Make sure you are logged in. At the top menu bar, you should see a section labeled “User”. If you see “Login/Register” here you are not logged in.

- Activate your account. If you have recently registered your account, you may first have to activate it. You will receive an e-mail with an activation link.

- Make sure to check your spam folder!

- Be patient. Galaxy is a free service; when a lot of people are using it, you may have to wait longer than usual (especially for ‘big’ jobs, e.g. alignments).

- Contact Support. If you really think something is wrong with the server, you can ask for support.

Reporting usage problems, security issues, and bugs

- For reporting Usage Problems, related to tools and functions, head to the Galaxy Help site.

- Red Error Datasets:

- Refer to the Troubleshooting errors FAQ for red error in datasets.

- Unexpected results in Green Success Dataset:

- To resolve it you may be asked to send in a shared history link and possibly a shared workflow link. For sharing your history, refer to this link.

- To reach our support team, visit Support FAQs.

- Functionality problems:

- Using Galaxy Help is the best way to get help in most cases.

- If the problem is more complex, email a description of the problem and how to reproduce it.

- Administrative problems:

- If the problem is present in your own Galaxy, the administrative configuration may be a factor.

- For the fastest help directly from the development community, admin issues can be alternatively reported to the mailing list or the GalaxyProject Gitter channel.

- For Security Issues, do not report them via GitHub. Kindly disclose these as explained in this document.

- For Bug Reporting, create a GitHub issue. Include the steps mentioned here.

- Search the GTN Search to find prior Q & A, FAQs, tutorials, and other documentation across all Galaxy resources, to check whether someone has already faced your issue.

Results may vary

Comment: Your results may be slightly different from the ones presented in this tutorial due to differing versions of tools, reference data, external databases, or because of stochastic processes in the algorithms.

Troubleshooting errors

When you get a red dataset in your history, it means something went wrong. But how can you find out what it was? And how can you report errors?

When something goes wrong in Galaxy, there are a number of things you can do to find out what it was. Error messages can help you figure out whether it was a problem with one of the settings of the tool, or with the input data, or maybe there is a bug in the tool itself and the problem should be reported. Below are the steps you can follow to troubleshoot your Galaxy errors.

- Expand the red history dataset by clicking on it.

- Sometimes you can already see an error message here

- View the error message by clicking on the bug icon galaxy-bug

- Check the logs. Output (stdout) and error logs (stderr) of the tool are available:

- Expand the history item

- Click on the details icon

- Scroll down to the Job Information section to view the 2 logs:

- Tool Standard Output

- Tool Standard Error

- For more information about specific tool errors, please see the Troubleshooting section

- Submit a bug report if you are still unsure what the problem is.

- Click on the bug icon galaxy-bug

- Write down any information you think might help solve the problem

- See this FAQ on how to write good bug reports

- Click galaxy-bug Report button

- Ask for help!

- Where?

- In the GTN Gitter Channel

- In the Galaxy Gitter Channel

- Browse the Galaxy Help Forum to see if others have encountered the same problem before (or post your question).

- When asking for help, it is useful to share a link to your history

Will my jobs keep running?

Galaxy is a fantastic system, but some users find themselves wondering:

Will my jobs keep running once I’ve closed the tab? Do I need to keep my browser open?

No, you don’t! You can safely:

- Start jobs

- Shut down your computer

and your jobs will keep running in the background! Whenever you next visit Galaxy, you can check if your jobs are still running or completed.

However, this is not true for uploading data from your computer. You must wait for the upload of a dataset from your computer to finish. (Uploading via URL is not affected by this; if you’re uploading from a URL, you can close your computer.)

Collections

Adding a tag to a collection

- Click on the collection

- Add a tag starting with # in the Add tags field

Tags starting with # will be automatically propagated to the outputs of tools using this dataset.

- Press Enter

- Check that the tag is appearing below the collection name

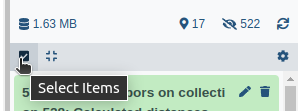

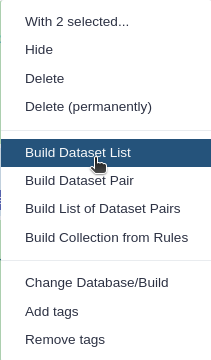

Creating a dataset collection

- Click on Operations on multiple datasets (check box icon) at the top of the history panel

- Check all the datasets in your history you would like to include

- Click For all selected.. and choose Build dataset list

- Enter a name for your collection

- Click Create List to build your collection

- Click on the checkmark icon at the top of your history again

Creating a paired collection

- Click on Operations on multiple datasets (check box icon) at the top of the history panel

- Check all the datasets in your history you would like to include

- Click For all selected.. and choose Build List of Dataset Pairs

- Change the text of unpaired forward to a common selector for the forward reads

- Change the text of unpaired reverse to a common selector for the reverse reads

- Click Pair these datasets for each valid forward and reverse pair.

- Enter a name for your collection

- Click Create List to build your collection

- Click on the checkmark icon at the top of your history again

Renaming a collection

- Click on the collection

- Click on the name of the collection at the top

- Change the name

- Press Enter

Data upload

Data retrieval with “NCBI SRA Tools” (fastq-dump)

This section will guide you through downloading experimental metadata, organizing the metadata to short lists corresponding to conditions and replicates, and finally importing the data from NCBI SRA in collections reflecting the experimental design.

Downloading metadata

- It is critical to understand the condition/replicate structure of an experiment before working with the data so that it can be imported as collections ready for analysis. Direct your browser to SRA Run Selector and, in the search box, enter the GEO dataset identifier (for example: GSE72018). Once the study appears, click the box to download the “RunInfo Table”.

Organizing metadata

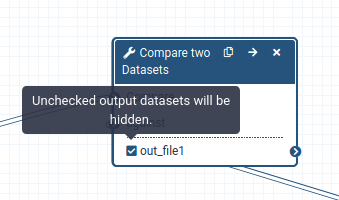

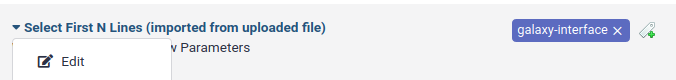

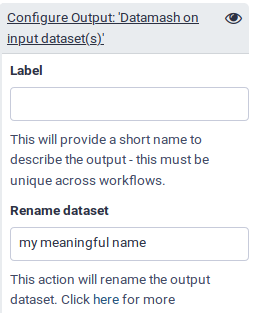

- The “RunInfo Table” provides the experimental condition and replicate structure of all of the samples. Prior to importing the data, we need to parse this file into individual files that contain the sample IDs of the replicates in each condition. This can be achieved by using a combination of the ‘group’, ‘compare two datasets’, ‘filter’, and ‘cut’ tools to end up with single column lists of sample IDs (SRRxxxxx) corresponding to each condition.

Importing data

- Provide the files with SRR IDs to NCBI SRA Tools (fastq-dump) to import the data from SRA to Galaxy. By organizing the replicates of each condition in separate lists, the data will be imported as “collections” that can be directly loaded to a workflow or analysis pipeline.
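The metadata-organizing step can also be done outside Galaxy before uploading the ID lists. The sketch below is one possible approach, not the method used in the tutorial: it assumes the RunInfo Table is a comma-separated file with a header row, a column named Run holding the SRR accessions, and no quoted fields containing commas. The function name split_runs_by_condition and the condition column name you pass in are hypothetical and depend on your study.

```shell
# Write one file of run accessions (SRRxxxxx) per value of a condition column.
# Usage: split_runs_by_condition RUNINFO.csv CONDITION_COLUMN OUTPUT_DIR
# Assumptions: comma-separated input with a header row and a "Run" column;
# naive CSV parsing (quoted fields containing commas are not handled).
split_runs_by_condition() {
  table=$1; column=$2; outdir=$3
  mkdir -p "$outdir" || return 1
  awk -F ',' -v col="$column" -v dir="$outdir" '
    { sub(/\r$/, "") }                      # tolerate Windows line endings
    NR == 1 {
      for (i = 1; i <= NF; i++) {
        if ($i == "Run") run_idx = i
        if ($i == col)   cond_idx = i
      }
      if (!run_idx || !cond_idx) { print "column not found" | "cat 1>&2"; exit 1 }
      next
    }
    {
      label = $cond_idx
      gsub(/[^A-Za-z0-9_]/, "_", label)     # make the value safe as a file name
      print $run_idx > (dir "/" label ".txt")
    }' "$table"
}
```

Each resulting file is a single-column list of accessions for one condition, which is the shape of input described above for NCBI SRA Tools (fastq-dump).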

Directly obtaining UCSC sourced *genome* identifiers

Option 1

- Go to UCSC Genome Browser, navigate to “genomes”, then the species of interest.

- On the home page for the genome build, immediately under the top navigation box, in the blue bar next to the full genome build name, you will find the View sequences button.

- Click on the View sequences button and it will take you to a detail page with a table listing out the contents.

Option 2

- Use the tool Get Data -> UCSC Main.

- In the Table Browser, choose the target genome and build.

- For “group” choose the last option “All Tables”.

- For “table” choose “chromInfo”.

- Leave all other options at default and send the output to Galaxy.

- This new dataset will load as a tabular dataset into your history.

- It will list out the contents of the genome build, including the chromosome identifiers (in the first column).

How can I upload data using EBI-SRA?

- Search for your data directly in the tool and use the Galaxy links.

- Be sure to check your sequence data for correct quality score formats and the metadata “datatype” assignment.

Importing data from a data library

As an alternative to uploading the data from a URL or your computer, the files may also have been made available from a shared data library:

- Go into Shared data (top panel) then Data libraries

- Navigate to the correct folder as indicated by your instructor

- Select the desired files

- Click on the To History button near the top and select as Datasets from the dropdown menu

- In the pop-up window, select the history you want to import the files to (or create a new one)

- Click on Import

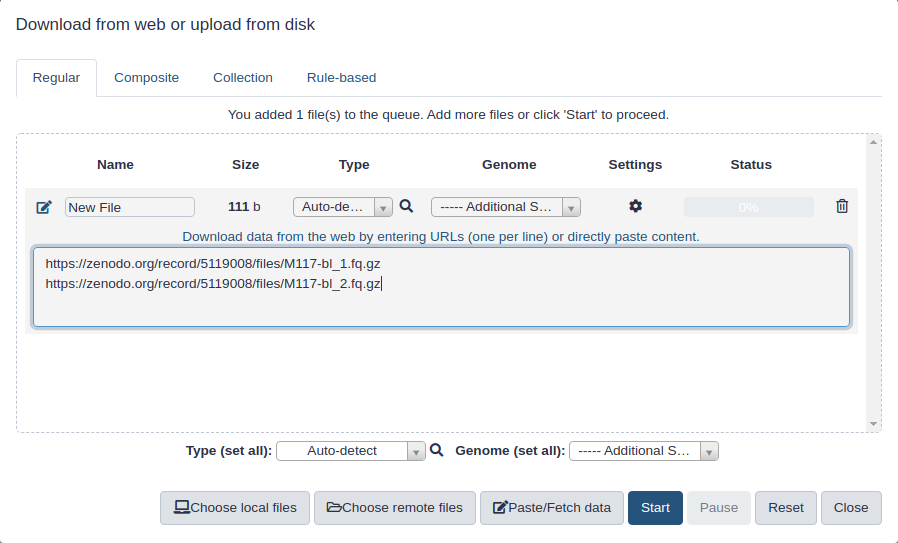

Importing via links

- Copy the link location

- Open the Galaxy Upload Manager (galaxy-upload on the top-right of the tool panel)

- Select Paste/Fetch Data

- Paste the link into the text field

- Press Start

- Close the window

NCBI SRA sourced fastq data

In these FASTQ data:

- The quality score identifier (+) is sometimes not a match for the sequence identifier (@).

- The forward and reverse reads may be interlaced and need to be separated into distinct datasets.

- Both problems may be present in a dataset. Correct the first, then the second, as explained below.

- Format problems of any kind can cause tool failures and/or unexpected results.

- Fix the problems before running any other tools (including FastQC, Fastq Groomer, or other QA tools)

For inconsistent sequence (@) and quality (+) identifiers

Correct the format by running the tool Replace Text in entire line with these options:

- Find pattern: ^\+SRR.+

- Replace with: +

Note: If the quality score line is named like “+ERR” instead (or other valid options), modify the pattern search to match.
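The same substitution can be applied on the command line before upload. This is a minimal sketch using sed with the pattern above; the function name simplify_quality_headers and its optional prefix argument are hypothetical, introduced only for illustration.

```shell
# Replace quality header lines such as "+SRR1234.1 ..." with a bare "+".
# The optional argument is the accession prefix that follows the "+"
# (SRR by default, e.g. pass ERR for "+ERR..." lines).
# Caution: a quality string could itself begin with "+SRR"; like the
# pattern above, this sketch does not guard against that rare case.
simplify_quality_headers() {
  prefix=${1:-SRR}
  sed -E "s/^\\+${prefix}.+/+/"
}
```

Usage: simplify_quality_headers < reads.fastq > fixed.fastq, or simplify_quality_headers ERR < reads.fastq > fixed.fastq for data with “+ERR” quality lines.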

For interlaced forward and reverse reads

Solution 1 (reads named /1 and /2)

- Use the tool FASTQ de-interlacer on paired end reads

Solution 2 (reads named /1 and /2)

- Create distinct datasets from an interlaced fastq dataset by running the tool Manipulate FASTQ reads on various attributes on the original dataset. It will run twice.

Note: The solution does NOT use the FASTQ Splitter tool. The data to be manipulated are interlaced sequences. This is different in format from data that are joined into a single sequence.

Use these Manipulate FASTQ settings to produce a dataset that contains the /1 reads:

Match Reads

- Match Reads by: Name/Identifier

- Identifier Match Type: Regular Expression

- Match by: .+/2

Manipulate Reads

- Manipulate Reads by: Miscellaneous Actions

- Miscellaneous Manipulation Type: Remove Read

Use these Manipulate FASTQ settings to produce a dataset that contains the /2 reads:

- Exact same settings as above except for this change: Match by: .+/1

Solution 3 (reads named /1 and /3)

- Use the same operations as in Solution 2 above, except change the first Manipulate FASTQ query term to be:

- Match by: .+/3

Solution 4 (reads named without /N)

- If your data has differently formatted sequence identifiers, the “Match by” expression from Solution 2 above can be modified to suit your identifiers.

Alternative identifiers such as:

@M00946:180:000000000-ANFB2:1:1107:14919:14410 1:N:0:1

@M00946:180:000000000-ANFB2:1:1107:14919:14410 2:N:0:1
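The logic behind Solutions 2 to 4 can be sketched on the command line as well. Note that this sketch uses the inverse formulation: instead of removing reads that match a pattern, it keeps the reads whose identifier line matches. It assumes strict four-line FASTQ records (no wrapped sequence lines); the function name select_reads is hypothetical.

```shell
# Keep only the reads whose identifier line matches a regular expression.
# Reads FASTQ on stdin; assumes each record is exactly four lines.
# Usage: select_reads 'REGEX' < interlaced.fastq > subset.fastq
select_reads() {
  awk -v pat="$1" '
    NR % 4 == 1 { keep = ($0 ~ pat) }   # identifier line decides for the whole record
    keep { print }'
}
```

For reads named /1 and /2, select_reads '/1$' produces the forward reads and select_reads '/2$' the reverse reads. For identifiers like the alternative examples above, expressions such as ' 1:N:' and ' 2:N:' may work, but check them against your own identifiers first.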

Upload fastqsanger datasets via links

- Click on Upload Data on the top of the left panel

- Click on Paste/Fetch

- Paste the URL into the text box that appears

- Set Type (set all) to fastqsanger or, if your data is compressed (the URLs have .gz extensions), to fastqsanger.gz

Upload few files (1-10)

- Click on Upload Data on the top of the left panel

- Click on Choose local file and select the files or drop the files in the Drop files here part

- Click on Start

- Click on Close

Upload many files (>10) via FTP

- Make sure to have an FTP client installed

There are many options. We can recommend FileZilla, a free FTP client that is available on Windows, MacOS, and Linux.

- Establish an FTP connection to the Galaxy server

- Provide the Galaxy server’s FTP server name (e.g. usegalaxy.org, ftp.usegalaxy.eu)

- Provide the username (usually the email address) and the password on the Galaxy server

- Connect

- Add the files to the FTP server by dragging/dropping them or right clicking on them and uploading them

The FTP transfer will start. Wait until all transfers are done.

- Open the Upload menu on the Galaxy server

- Click on Choose FTP file on the bottom

- Select files to import into the history

- Click on Start

Datasets

Adding a tag

Tags can help you to better organize your history and track datasets.

- Click on the dataset

- Click on galaxy-tags Edit dataset tags

- Add a tag starting with #

Tags starting with # will be automatically propagated to the outputs of tools using this dataset.

- Check that the tag is appearing below the dataset name

Changing database/build (dbkey)

You can tell Galaxy which dbkey (e.g. reference genome) your dataset is associated with. This may be used by tools to automatically use the correct settings.

- Click on the galaxy-pencil pencil icon for the dataset to edit its attributes

- In the central panel, change the Database/Build field

- Select your desired database key from the dropdown list

- Click the Save button

Changing the datatype

Galaxy will try to autodetect the datatype of your files, but you may need to manually set this occasionally.

- Click on the galaxy-pencil pencil icon for the dataset to edit its attributes

- In the central panel, click on the galaxy-chart-select-data Datatypes tab on the top

- Select your desired datatype

- tip: you can start typing the datatype into the field to filter the dropdown menu

- Click the Save button

Converting the file format

Some datasets can be transformed into a different format. Galaxy has some built-in file conversion options depending on the type of data you have.

- Click on the galaxy-pencil pencil icon for the dataset to edit its attributes

- In the central panel, click on the galaxy-gear Convert tab on the top

- Select the appropriate datatype from the list

- Click the Create dataset button to start the conversion.

Creating a new file

Galaxy allows you to create new files from the upload menu. You can supply the contents of the file.

- Open the Galaxy Upload Manager

- Select Paste/Fetch Data

Paste the file contents into the text field

- Press Start and Close the window

Datasets not downloading at all

- Check to see if pop-ups are blocked by your web browser. Where to check can vary by browser and extensions.

- Double check your API key, if used. Go to User > Preferences > Manage API key.

- Check the sharing/permission status of the Datasets. Go to Dataset > Pencil icon galaxy-pencil > Edit attributes > Permissions. If you do not see a “Permissions” tab, then you are not the owner of the data.

Notes:

- If the data was shared with you by someone else from a Shared History, or was copied from a Published History, be aware that there are multiple levels of data sharing permissions.

- All data are set to not shared by default.

- Datasets sharing permissions for a new history can be set before creating a new history. Go to User > Preferences > Set Dataset Permissions for New Histories.

- User > Preferences > Make all data private is a “one click” option to unshare ALL data (Datasets, Histories). Note that once confirmed and all data is unshared, the action cannot be “undone” in batch, even by an administrator. You will need to re-share data again and/or reset your global sharing preferences as wanted.

- Only the data owner has control over sharing/permissions.

- Any data you upload or create yourself is automatically owned by you with full access.

- You may not have been granted full access if the data were shared or imported, and someone else is the data owner (your copy could be “view only”).

- After you have a fully shared copy of any shared/published data from someone else, then you become the owner of that data copy. If the other person or you make changes, it applies to each person’s copy of the data, individually and only.

- Histories can be shared with included Datasets. Datasets can be downloaded/manipulated by others or viewed by others.

- Share access to Datasets is distinct but it relates to Histories’ access.

Detecting the datatype (file format)

- Click on the galaxy-pencil pencil icon for the dataset to edit its attributes

- In the central panel, click on the galaxy-chart-select-data Datatypes tab on the top

- Click the Auto-detect button to have Galaxy try to autodetect it.

Different dataset icons and their usage

Icons provide a visual experience for objects, actions, and ideas.

Dataset icons and their usage:

- galaxy-eye “Eye icon”: Display data of the job in the browser.

- galaxy-pencil “Pencil icon”: Edit attributes of the job.

- galaxy-cross “‘X’ icon”: Delete the job.

- galaxy-info “Info icon”: Job details and run information.

- galaxy-refresh “Refresh/Rerun icon”: Run this (selected) job again or examine original submitted form (filled in).

- galaxy-bug “Bug icon”: Review and optionally submit a bug report.

Downloading datasets

- Click on the dataset in your history to expand it

- Click on the Download icon galaxy-save to save the dataset to your computer.

Downloading datasets using command line

From the terminal window on your computer, you can use wget or curl.

- Make sure you have wget or curl installed.

- Click on the Dataset name, then click on the copy link icon galaxy-link. This is the direct-downloadable dataset link.

- Once you have the link, use any of the following commands:

- For wget

wget '<link>'

wget -O outfile '<link>' # save the download as outfile

wget -O outfile --no-check-certificate '<link>' # ignore SSL certificate warnings

wget -c '<link>' # continue an interrupted download

- For curl

curl -o outfile '<link>'

curl -o outfile --insecure '<link>' # ignore SSL certificate warnings

curl -C - -o outfile '<link>' # continue an interrupted download

- For dataset collections and datasets within collections you have to supply your API key with the request

- Sample commands for wget and curl respectively are:

wget 'https://usegalaxy.org/api/dataset_collections/d20ad3e1ccd4595de/download?key=MYSECRETAPIKEY'

curl -o myfile.txt 'https://usegalaxy.org/api/dataset_collections/d20ad3e1ccd4595de/download?key=MYSECRETAPIKEY'
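To avoid typing the API key into each command, the URL can be assembled from its parts. The sketch below only builds a URL of the form shown in the sample commands; the function name collection_download_url and the environment variable GALAXY_API_KEY are hypothetical names chosen for illustration.

```shell
# Build a collection download URL in the form shown in the sample commands.
# Usage: collection_download_url SERVER COLLECTION_ID API_KEY
collection_download_url() {
  # ${1%/} drops a trailing slash from the server address, if present
  printf '%s/api/dataset_collections/%s/download?key=%s\n' "${1%/}" "$2" "$3"
}

# Example (not run here): keep the key in an environment variable and quote
# the URL so the shell does not interpret the "?" character.
# curl -o outfile "$(collection_download_url https://usegalaxy.org d20ad3e1ccd4595de "$GALAXY_API_KEY")"
```

Keeping the key in a variable also keeps it out of scripts that you might share with others.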

Finding BAM dataset identifiers

Quickly learn what the identifiers are in any **BAM** dataset that is the result from mapping

- Run Samtools: IdxStats on the aligned data (bam dataset).

- The “index header” chromosome names and lengths will be listed in the output (along with read counts).

- Compare the chromosome identifiers to the chromosome (aka “chrom”) field in all other inputs: VCF, GTF, GFF(3), BED, Interval, etc.

Note:

- The original mapping target may have been a built-in genome index or a custom genome (transcriptome, exome, other); the same bam data will still be summarized.

- This method will not work for “sequence-only” bam datasets, as these usually have no header.
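If you have downloaded the IdxStats output and another input (for example a BED file), the comparison step can be sketched with standard command-line tools. This is an illustrative helper under stated assumptions, not a Galaxy feature: both files are tab-separated with the identifier in the first column, and the function name missing_identifiers is hypothetical.

```shell
# List identifiers used in an annotation file (first column) that are absent
# from Samtools IdxStats output (first column). Both inputs are tab-separated.
# Usage: missing_identifiers IDXSTATS.tsv ANNOTATION.bed
missing_identifiers() {
  bam_ids=$(mktemp) || return 1
  ann_ids=$(mktemp) || { rm -f "$bam_ids"; return 1; }
  # IdxStats ends with a "*" row for unmapped reads; it is not a chromosome.
  cut -f 1 "$1" | grep -v '^\*$' | LC_ALL=C sort -u > "$bam_ids"
  # Skip comment lines as well as BED "track" and "browser" lines.
  grep -v -E '^(#|track |browser )' "$2" | cut -f 1 | LC_ALL=C sort -u > "$ann_ids"
  # comm -13 prints lines that appear only in the second file.
  LC_ALL=C comm -13 "$bam_ids" "$ann_ids"
  rm -f "$bam_ids" "$ann_ids"
}
```

Any identifier printed (for example 2 where the BAM uses chr2) points to a naming mismatch between the inputs. Empty output means every annotation identifier is also present in the BAM header.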

Finding Datasets

- To review all active Datasets in your account, go to User > Datasets.

Notes:

- Logging out of Galaxy while the Upload tool is still loading data can cause uploads to abort. This is most likely to occur when a dataset is loaded by browsing local files.

- If you have more than one browser window open, each with a different Galaxy History loaded, the Upload tool will load data into the most recently used history.

- Click on refresh icon galaxy-refresh at the top of the History panel to display the current active History with the datasets.

How to unhide "hidden datasets"?

If you have run a workflow with hidden datasets, in your History:

- Click the gear icon galaxy-gear → Click Unhide Hidden Datasets

- Or use the toggle hidden to view them

When using the Copy Datasets feature, hidden datasets will not be available to transfer from the Source History list of datasets. To include them:

- Click the gear icon galaxy-gear → Click Unhide Hidden Datasets

- Click the gear icon galaxy-gear → Click Copy Datasets

Mismatched Chromosome identifiers and how to avoid them

The methods listed here help to identify and correct errors or unexpected results linked to inputs having non-identical chromosome identifiers and/or different chromosome sequence content.

If using a Custom Reference genome, the methods below also apply, but the first step is to make certain that the Custom Genome is formatted correctly. Improper formatting is the most common root cause of Custom Genome related errors.

Method 1: Finding BAM dataset identifiers

Method 2: Directly obtaining UCSC sourced genome identifiers

Method 3: Adjusting identifiers for UCSC sourced data used with other sourced data

Method 4: Adjusting identifiers or input source for any mixed sourced data

A Note on Built-in Reference Genomes

- The default variant for all genomes is “Full”, defined as all primary chromosomes (or scaffolds/contigs) including mitochondrial plus associated unmapped, plasmid, and other segments.

- When only one version of a genome is available for a tool, it represents the default “Full” variant.

Some genomes will have more than one variant available.

- The “Canonical Male” or sometimes simply “Canonical” variant contains the primary chromosomes for a genome. For example a human “Canonical” variant contains chr1-chr22, chrX, chrY, and chrM.

- The “Canonical Female” variant contains the primary chromosomes excluding chrY.

Moving datasets between Galaxy servers

On the origin Galaxy server:

- Click on the name of the dataset to expand the info.

- Click on the Copy link icon galaxy-link.

On the destination Galaxy server:

- Click on Upload data > Paste / Fetch Data and paste the link. Select attributes, such as genome assembly, if required. Hit the Start button.

Note: The copy link icon galaxy-link cannot be used to move HTML datasets (these can instead be downloaded using the download button galaxy-save) or SQLite datasets.

Purging datasets

- All account Datasets can be reviewed under User > Datasets.

- To permanently delete: use the link from within the dataset, or use the Operations on Multiple Datasets functions, or use the Purge Deleted Datasets option in the History menu.

Notes:

- Within a History, deleted/permanently deleted Datasets can be reviewed by toggling the deleted link at the top of the History panel, found immediately under the History name.

- Both active (shown by default) and hidden (the other toggle link, next to the deleted link) datasets can be reviewed the same way.

- Click on the far right “X” to delete a dataset.

- Datasets in a deleted state are still part of your quota usage.

- Datasets must be purged (permanently deleted) to not count toward quota.

Quotas for datasets and histories

- Deleted datasets and deleted histories containing datasets are considered when calculating quotas.

- Permanently deleted datasets and permanently deleted histories containing datasets are not considered.

- Histories/datasets that are shared with you are only partially considered unless you import them.

Note: To reduce quota usage, refer to How can I reduce quota usage while still retaining prior work (data, tools, methods)? FAQ.

Renaming a dataset

- Click on the galaxy-pencil pencil icon for the dataset to edit its attributes

- In the central panel, change the Name field

- Click the Save button

Understanding job statuses

Job statuses help you understand which stage of processing your work has reached.

- Green: The job was completed successfully.

- Yellow: The job is executing. Allow this to complete! If a job runs longer than the allowed time, it will fail with a “wall-time” error and turn red.

- Grey: The job is being evaluated to run (new dataset) or is queued. Allow this to complete.

- Red: The job has failed.

- Light Blue: The job is paused. This indicates either an input has a problem or that you have exceeded the disk quota set by the administrator of the Galaxy instance you are working on.

- Grey, Yellow, Grey again: The job is waiting to run due to admin re-run or an automatic fail-over to a longer-running cluster.

- Bright blue with moving arrow: May be found in earlier Galaxy versions. Applies to the “Get Data → Upload File” tool only - the upload job is queuing or running.

It is essential to allow queued jobs to remain queued and not delete/re-run them.

Working with GFF GTF GTF2 GFF3 reference annotation

- All annotation datatypes have a distinct format and content specification.

- Data providers may release variations of any, and tools may produce variations.

- GFF3 data may be labeled as GFF.

- Content can overlap but is generally not understood by tools that are expecting just one of these specific formats.

- Best practices

- The sequence identifiers must exactly match between the reference annotation and the reference genome, transcriptome, or exome.

- Most tools expect GTF format unless the tool form specifically notes otherwise.

- Get the GTF version from the data providers if it is available.

- If only GFF3 is available, you can attempt to transform it with the tool gffread.

- Was GTF data detected as GFF during Upload? It probably has headers.

- Remove the headers (lines that start with a “#”) with the Select tool using the option “NOT Matching” with the regular expression: ^#

- Redetect the datatype. It should be GTF once corrected.

- UCSC annotation

- Find annotation under their Downloads area. The path will be similar to:

https://hgdownload.soe.ucsc.edu/goldenPath/<database>/bigZips/genes/

- Copy the URL from UCSC and paste it into the Upload tool, allowing Galaxy to detect the datatype.
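To show what the header-removal step does, here is a minimal Python sketch of the same filter: keep only lines that do NOT match the regular expression `^#`. It is not the Select tool itself, and the function name `strip_header_lines` is hypothetical.

```python
import re

# Same pattern as in the Select tool step: a line that starts with "#".
HEADER_PATTERN = re.compile(r"^#")


def strip_header_lines(lines):
    """Return only the lines that do not start with '#'."""
    return [line for line in lines if not HEADER_PATTERN.search(line)]
```

After running the actual tool in Galaxy, the datatype still has to be redetected as described above.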

Working with deleted datasets

Deleted datasets and histories can be recovered by users as they are retained in Galaxy for a time period set by the instance administrator. Deleted datasets can be undeleted or permanently deleted within a History. Links to show/hide deleted (and hidden) datasets are at the top of the History panel.

- To review or adjust an individual dataset:

- Click on the name to expand it.

- If it is only deleted, but not permanently deleted, you’ll see a message with links to recover or to purge.

- Click on Undelete it to recover the dataset, making it active and accessible to tools again.

- Click on Permanently remove it from disk to purge the dataset and remove it from the account quota calculation.

- To review or adjust multiple datasets in batch:

- Click on the checked box icon galaxy-selector near the top right of the history panel to switch into “Operations on Multiple Datasets” mode.

- Check the selection box for each dataset you want to modify, then choose your option (show, hide, delete, undelete, purge, or group datasets).

Working with very large fasta datasets

- Run FastQC on your data to make sure the format/content is what you expect. Run more QA as needed.

- Search GTN tutorials with the keyword “qa-qc” for examples.

- Search Galaxy Help with the keywords “qa-qc” and “fasta” for more help.

- Assembly result?

- Consider filtering by length to remove reads that did not assemble.

- Formatting criteria:

- All sequence identifiers must be unique.

- Some tools will require that there is no description line content, only identifiers, in the fasta title line (“>” line). Use NormalizeFasta to remove the description (all content after the first whitespace) and wrap the sequences to 80 bases.

- Custom genome, transcriptome, or exome?

- Only appropriate for smaller genomes (bacterial, viral, most insects).

- Not appropriate for any mammalian genomes, or some plants/fungi.

- Sequence identifiers must be an exact match with all other inputs or expect problems. See GFF GTF GFF3.

- Formatting criteria:

- All sequence identifiers must be unique.

- ALL tools will require that there is no description content, only identifiers, in the fasta title line (“>” line). Use NormalizeFasta to remove the description (all content after the first whitespace) and wrap the sequences to 80 bases.

- The only exception is when executing the MakeBLASTdb tool and when the input fasta is in NCBI BLAST format (see the tool form).
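The formatting criteria above (unique identifiers, no description content on the title line, sequences wrapped to 80 bases) can be sketched in plain Python. This is an illustration of the criteria only, not the NormalizeFasta tool; the function name `normalize_fasta` is hypothetical.

```python
def normalize_fasta(text, width=80):
    """Trim title lines at the first whitespace and re-wrap sequences.

    Raises ValueError if two records end up with the same identifier.
    """
    records = []  # each entry: [identifier, [sequence chunks]]
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        if line.startswith(">"):
            fields = line[1:].split()
            identifier = fields[0] if fields else ""
            records.append([identifier, []])
        elif records:
            records[-1][1].append(line)

    identifiers = [identifier for identifier, _ in records]
    if len(identifiers) != len(set(identifiers)):
        raise ValueError("duplicate sequence identifiers")

    out = []
    for identifier, chunks in records:
        sequence = "".join(chunks)
        out.append(f">{identifier}")
        out.extend(sequence[i:i + width] for i in range(0, len(sequence), width))
    return "\n".join(out) + "\n"
```

For very large FASTA files, loading everything into memory as done here is not practical; use the Galaxy tool instead.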

Working with very large fastq datasets

- Run FastQC on your data to make sure the format/content is what you expect. Run more QA as needed.

- Search GTN tutorials with the keyword “qa-qc” for examples.

- Search Galaxy Help with the keywords “qa-qc” and “fastq” for more help.

- How to create a single smaller input. Search the tool panel with the keyword “subsample” for tool choices.

- How to create multiple smaller inputs. Start with Split file to dataset collection, then merge the results back together using a tool specific for the datatype. Example: BAM results? Use MergeSamFiles.
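The idea behind creating multiple smaller inputs can be sketched as splitting a FASTQ file into chunks of whole records. This is not the Split file tool; it is a simplified sketch that assumes the common layout of exactly four lines per record, and the function names are hypothetical.

```python
from itertools import islice


def read_fastq_records(lines):
    """Yield FASTQ records as lists of 4 lines (assumes 4-line records)."""
    iterator = iter(lines)
    while True:
        record = list(islice(iterator, 4))
        if not record:
            return
        if len(record) != 4:
            raise ValueError("truncated FASTQ record")
        yield record


def split_fastq(lines, records_per_chunk):
    """Yield chunks, each holding at most `records_per_chunk` records."""
    chunk = []
    for record in read_fastq_records(lines):
        chunk.append(record)
        if len(chunk) == records_per_chunk:
            yield chunk
            chunk = []
    if chunk:
        yield chunk
```

Because each chunk holds only complete records in their original order, concatenating the chunks gives back the original file.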

Datatypes

Best practices for loading fastq data into Galaxy

- As of release 17.09, `fastq` data will have the datatype `fastqsanger` auto-detected when that quality score scaling is detected and “autodetect” is used within the Upload tool. Compressed `fastq` data will be converted to uncompressed in the history.

- To preserve `fastq` compression, directly assign the appropriate datatype (eg: `fastqsanger.gz`).

- If the data is close to or over 2 GB in size, be sure to use FTP.

- If the data was already loaded as `fastq.gz`, don’t worry! Just test the data for correct format (as needed) and assign the metadata type.

Compressed FASTQ files, (`*.gz`)

- Files ending in `.gz` are compressed (zipped) files.

- The `fastq.gz` format is a compressed version of a `fastq` dataset.

- The `fastqsanger.gz` format is a compressed version of the `fastqsanger` datatype, etc.

- Compression saves space (and therefore your quota).

- Tools can accept the compressed versions of input files

- Make sure the datatype (compressed or uncompressed) is correct for your files, or it may cause tool errors.

FASTQ files: `fastq` vs `fastqsanger` vs ..

FASTQ files come in various flavours. They differ in the encoding scheme they use. See our QC tutorial for a more detailed explanation of encoding schemes.

Nowadays, the most commonly used encoding scheme is sanger. In Galaxy, this is the `fastqsanger` datatype. If you are using older datasets, make sure to verify the FASTQ encoding scheme used in your data.

Be Careful: choosing the wrong encoding scheme can lead to incorrect results!

Tip: There are 2 Galaxy datatypes that have similar names but are not the same. Please make sure you do not confuse `fastqsanger` with `fastqcssanger` (note the additional `cs`).

Tip: When in doubt, choose `fastqsanger`

How do `fastq.gz` datasets relate to the `.fastqsanger` datatype metadata assignment?

Before assigning `fastqsanger` or `fastqsanger.gz`, be sure to confirm the format.

TIP:

- Using non-fastqsanger scaled quality values will cause scientific problems with tools that expect `fastqsanger` formatted input.

- Even if the tool does not fail, get the format right from the start to avoid problems. Incorrect format is still one of the most common reasons for tool errors or unexpected results (within Galaxy or not).

- For more information, see How to format fastq data for tools that require .fastqsanger format?

How to format fastq data for tools that require .fastqsanger format?

- Most tools that accept FASTQ data expect it to be in a specific FASTQ version: `.fastqsanger`. The `.fastqsanger` datatype must be assigned to each FASTQ dataset.

In order to do that:

- Watch the FASTQ Prep Illumina video for a complete walk-through.

- Run FastQC first to assess the type.

- Run FASTQ Groomer if the data needs to have the quality scores rescaled.

- If you are certain that the quality scores are already scaled to Sanger Phred+33 (the result of an Illumina 1.8+ pipeline), the datatype `.fastqsanger` can be directly assigned. Click on the pencil icon galaxy-pencil to reach the Edit Attributes form. In the center panel, click on the “Datatype” tab, enter the datatype `.fastqsanger`, and save.

- Run FastQC again on the entire dataset if any changes were made to the quality scores for QA.

Other tips

- If you are not sure what type of FASTQ data you have (maybe it is not Illumina?), see the help directly on the FASTQ Groomer tool for information about types.

- For Illumina, first run FastQC on a sample of your data (how to read the full report). The output report will note the quality score type interpreted by the tool. If not `.fastqsanger`, run FASTQ Groomer on the entire dataset. If `.fastqsanger`, just assign the datatype.

- For SOLiD, run NGS: Fastq manipulation → AB-SOLID DATA → Convert, to create a `.fastqcssanger` dataset. If you have uploaded a color space fastq sequence with quality scores already scaled to Sanger Phred+33 (`.fastqcssanger`), first confirm by running FastQC on a sample of the data. Then if you want to double-encode the color space into pseudo-nucleotide space (required by certain tools), see the instructions on the tool form Fastq Manipulation for the conversion.

- If your data is FASTA, but you want to use tools that require FASTQ input, then use the tool NGS: QC and manipulation → Combine FASTA and QUAL. This tool will create “placeholder” quality scores that fit your data. On the output, click on the pencil icon galaxy-pencil to reach the Edit Attributes form. In the center panel, click on the “Datatype” tab, enter the datatype `.fastqsanger`, and save.
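To give an intuition for how a quality score type can be inferred, here is a rough heuristic sketch based on the ASCII range of the quality characters. It is not what FastQC or FASTQ Groomer do internally; the function name and the cut-off values (59 and 74) are assumptions taken from the commonly cited ranges for Phred+33 and the older +64 encodings.

```python
def guess_quality_offset(quality_lines):
    """Guess the quality encoding from the quality strings of some reads.

    Returns "phred33" (the Sanger scaling used by fastqsanger), "phred64"
    (older Illumina/Solexa style scaling), or "ambiguous".
    """
    codes = [ord(char) for line in quality_lines for char in line]
    if not codes:
        raise ValueError("no quality characters supplied")
    lowest, highest = min(codes), max(codes)
    if lowest < 59:
        # Characters this low do not occur in the +64 encodings.
        return "phred33"
    if highest > 74:
        # Values this high are unusual for Phred+33 Illumina data.
        return "phred64"
    return "ambiguous"
```

A result of "ambiguous" or "phred64" is a signal to check the data with FastQC before assigning `fastqsanger`, not a final answer.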

Identifying and formatting Tabular Datasets

Format help for Tabular/BED/Interval Datasets

A Tabular datatype is human readable and has tabs separating data columns. Please note that tabular data is different from comma separated data (`.csv`). The common datatypes are: `.bed`, `.gtf`, `.interval`, or `.txt`.

- Click the pencil icon galaxy-pencil to reach the Edit Attributes form.

- Change the datatype (3rd tab) and save.

- Label columns (1st tab) and save.

- Metadata will be assigned, then the dataset can be used.

- If the required input is a BED or Interval datatype, adjusting (`.tab` → `.bed`, `.tab` → `.interval`) may be possible using a combination of Text Manipulation tools, to create a dataset that matches required specifications.

- Some tools require that BED format be followed, even if the datatype Interval (with less strict column ordering) is accepted on the tool form.

- These tools will fail if they are run with malformed BED datasets or non-specific column assignments.

- Solution: reorganize the data to be in BED format and rerun.
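A quick way to see whether a tabular dataset is likely to be accepted as BED is to check the first three columns (chromosome, start, end). The sketch below is only a basic sanity check under that assumption, not a full BED validator, and the function name `bed_problems` is hypothetical.

```python
def bed_problems(lines):
    """Return (line_number, message) pairs for lines that break basic BED rules."""
    problems = []
    for number, line in enumerate(lines, start=1):
        line = line.rstrip("\n")
        # Skip blank lines and common header/annotation lines.
        if not line or line.startswith(("#", "track", "browser")):
            continue
        fields = line.split("\t")
        if len(fields) < 3:
            problems.append((number, "fewer than 3 tab-separated columns"))
            continue
        start, end = fields[1], fields[2]
        if not (start.isdecimal() and end.isdecimal()):
            problems.append((number, "start/end are not non-negative integers"))
        elif int(start) > int(end):
            problems.append((number, "start is greater than end"))
    return problems
```

A comma-separated line shows up here as having fewer than 3 tab-separated columns, which is the tabular versus `.csv` difference noted above.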

Understanding Datatypes

- Allow Galaxy to detect the datatype during Upload, and adjust from there if needed.

- Tool forms will filter for the appropriate datatypes they can use for each input.

- Directly changing a datatype can lead to errors. Be intentional and consider converting instead when possible.

- Dataset content can also be adjusted (tools: Data manipulation) and the expected datatype detected. Detected datatypes are the most reliable in most cases.

- If a tool does not accept a dataset as valid input, it is not in the correct format with the correct datatype.

- Once a dataset’s content matches the datatype, and that dataset is repeatedly used (example: Reference annotation) use that same dataset for all steps in an analysis or expect problems. This may mean rerunning prior tools if you need to make a correction.

- Tip: Not sure what datatypes a tool is expecting for an input?

- Create a new empty history

- Click on a tool from the tool panel

- The tool form will list the accepted datatypes per input

- Warning: In some cases, tools will transform a dataset to a new datatype at runtime for you.

- This is generally helpful, and best reserved for smaller datasets.

- Why? This can also unexpectedly create hidden datasets that are near duplicates of your original data, only in a different format.

- For large data, that can quickly consume working space (quota).

- Deleting/purging any hidden datasets can lead to errors if you are still using the original datasets as an input.

- Consider converting to the expected datatype yourself when data is large.

- Then test the tool directly on converted data. If it works, purge the original to recover space.

Using compressed fastq data as tool inputs

- If the tool accepts `fastq` input, then `.gz` compressed data assigned to the datatype `fastq.gz` is appropriate.

- If the tool accepts `fastqsanger` input, then `.gz` compressed data assigned to the datatype `fastqsanger.gz` is appropriate.

- Using uncompressed `fastq` data is still an option with tools. The choice is yours.

TIP: Avoid labeling compressed data with an uncompressed datatype, and the reverse. Jobs using mismatched datatype versus actual format will fail with an error.
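One way to check for such a mismatch on a downloaded file is to look at its first two bytes: gzip files start with the bytes `1f 8b`. The sketch below compares that with whether the datatype name ends in `.gz`. The function names are hypothetical, and this is not a check Galaxy is known to expose in this form.

```python
GZIP_MAGIC = b"\x1f\x8b"  # first two bytes of any gzip file


def looks_gzipped(first_bytes):
    """Return True if the data starts with the gzip magic number."""
    return first_bytes[:2] == GZIP_MAGIC


def datatype_matches_content(first_bytes, datatype):
    """Return True when a '.gz' datatype is paired with gzip data, and vice versa."""
    return looks_gzipped(first_bytes) == datatype.endswith(".gz")


def file_matches_datatype(path, datatype):
    """Same check, reading the first two bytes from a local file."""
    with open(path, "rb") as handle:
        return datatype_matches_content(handle.read(2), datatype)
```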

Features

Using the Scratchbook to view multiple datasets

If you would like to view two or more datasets at once, you can use the Scratchbook feature in Galaxy:

- Click on the Scratchbook icon galaxy-scratchbook on the top menu bar.

- You should see a little checkmark on the icon now

- View a dataset by clicking on the eye icon galaxy-eye

- You should see the output in a window overlaid over Galaxy

- You can resize this window by dragging the bottom-right corner

- Click outside the file to exit the Scratchbook

- View galaxy-eye a second dataset from your history

- You should now see a second window with the new dataset

- This makes it easier to compare the two outputs

- Repeat this for as many files as you would like to compare

- You can turn off the Scratchbook galaxy-scratchbook by clicking on the icon again

Why not use Excel?

Excel is a fantastic tool and a great place to build simple analysis models, but when it comes to scaling, Galaxy wins every time. You could just as easily use Excel to answer the same question, and if the goal is to learn how to use a tool, then either tool would be great! But what if you are working on a question where your analysis matters? Maybe you are working with human clinical data trying to diagnose a set of symptoms, or you are working on research that will eventually be published and maybe earn you a Nobel Prize?

In these cases your analysis, and the ability to reproduce it exactly, is vitally important, and Excel won’t help you here. It doesn’t track changes and it offers very little insight to others on how you got from your initial data to your conclusions.

Galaxy, on the other hand, automatically records every step of your analysis. And when you are done, you can share your analysis with anyone. You can even include a link to it in a paper (or your acceptance speech). In addition, you can create a reusable workflow from your analysis that others (or yourself) can use on other datasets.

Another challenge with spreadsheet programs is that they don’t scale to support next generation sequencing (NGS) datasets, a common type of data in genomics, and which often reach gigabytes or even terabytes in size. Excel has been used for large datasets, but you’ll often find that learning a new tool gives you significantly more ability to scale up, and scale out your analyses.

Histories

Copy a dataset between histories

Sometimes you may want to use a dataset in multiple histories. You do not need to re-upload the data; you can copy datasets from one history to another.

There are 3 ways to copy datasets between histories:

From the original history

- Click on the galaxy-gear icon (History options) on the top of the history panel

- Click on Copy Dataset

Select the desired files

Give a relevant name to the “New history”

- Click on the new history name in the green box that has just appeared to switch to this history

From the galaxy-columns View all histories

- Click on galaxy-columns View all histories on the top right

- Switch to the history in which the dataset should be copied

- Drag the dataset to copy from its original history

- Drop it in the target history

From the target history

- Click on User in the top bar

- Click on Datasets

- Search for the dataset to copy

- Click on it

- Click on Copy to History

Creating a new history

Histories are an important part of Galaxy; most people use a new history for every new analysis. Always make sure to give your histories good names, so you can easily find your results later.

Click the new-history icon at the top of the history panel.

If the new-history is missing:

- Click on the galaxy-gear icon (History options) on the top of the history panel

- Select the option Create New from the menu

Downloading histories

- Click on the gear icon galaxy-gear on the top of the history panel.

- Select “Export History to File” from the History menu.

- Click on the “Click here to generate a new archive for this history” text.

- Wait for the Galaxy server to prepare history for download.

- Click on the generated link to download the history.

Find all Histories and purge (aka permanently delete)

- Log in to your Galaxy account.

- On the top navigation bar, click on User.

- On the drop-down menu that appears, click on Histories.

- Click on Advanced Search; additional fields will be displayed.

- Next to the Status field, click All; a list of all histories will be displayed.

- Check the box next to Name in the displayed list to select all histories.

- Click Delete Permanently to purge all histories.

- A pop-up dialog box will warn you that the history contents will be removed and that this cannot be undone. Click OK to confirm.

Finding Histories

- To review all histories in your account, go to User > Histories in the top menu bar.

- At the top of the History listing, click on Advanced Search.

- Set the status to all to view all of your active, deleted, and permanently deleted (purged) histories.

- Histories in all states are listed for registered accounts, meaning you will always find your data here if it ever appears to be “lost”.

- Note: Permanently deleted (purged) Histories may be fully removed from the server at any time. The data content inside the History is always removed at the time of purging (by a double-confirmed user action), but the purged History artifact may still be in the listing. Purged data content cannot be restored, even by an administrator.

Finding and working with "Histories shared with me"

How to find and work on histories shared with you

To find histories shared with me:

- Log into your account.

- Select User, then in the drop-down menu select Histories shared with me.

To work with shared histories:

- Import the History into your account via copying it to work with it.

- Unshare Histories that you no longer want shared with you or that you have already made a copy of.

Note: Shared Histories (whether copied into your account or not) count in part toward your total account data quota usage. More details on how shared histories affect account quota usage can be found in this link.

How to set Data Privacy Features?

Privacy controls are only enabled if desired. Otherwise, datasets by default remain private and unlisted in Galaxy. This means that a dataset you’ve created is virtually invisible until you publish a link to it.

Below are three optional ways to set private Histories. Use whichever of them fits what you want to achieve:

Changing the privacy settings of individual dataset.

- Click on the dataset name to expand it.

- Click the pencil icon galaxy-pencil.

- Move to the Permissions tab.

- The Permissions tab has two input fields.

- In the second field, labeled access, search for the name of the user to grant permission to.

- Click on save permission.

Note: Adding additional roles to the ‘access’ permission along with your “private role” does not do what you may expect. Since roles are always logically added together, only you will be able to access the dataset, since only you are a member of your “private role”.

Make all datasets in the current history private.

- Open the History Options menu galaxy-gear at the top of your history panel

- Click the Make Private option in the dropdown menu available

- This sets the default settings for all new datasets in this history to private.

Set the default privacy settings for new histories

- Click on User in the top bar to open the dropdown galaxy-dropdown

- Click on Preferences in the dropdown galaxy-dropdown

- Select Set Dataset Permissions for New Histories

- Add a permission and click save permission

Note: Changes made here will only affect histories created after these settings have been stored.

Importing a history

- Open the link to the shared history

- Click on the new-history Import history button on the top right

- Enter a title for the new history

- Click on Import

Renaming a history

- Click on Unnamed history (or the current name of the history) (Click to rename history) at the top of your history panel

- Type the new name

- Press Enter

Searching your history

To make it easier to find datasets in large histories, you can filter your history by keywords as follows:

Click on the search datasets box at the top of the history panel.

- Type a search term in this box

- For example a tool name, or sample name

- To undo the filtering and show your full history again, press on the clear search button galaxy-clear next to the search box

Sharing your History

You can share your work in Galaxy. There are various ways you can give other users access to one of your histories.

Sharing your history allows others to import and access the datasets, parameters, and steps of your history.

- Share via link

- Open the History Options galaxy-gear menu (gear icon) at the top of your history panel

- galaxy-toggle Make History accessible

- A Share Link will appear that you give to others

- Anybody who has this link can view and copy your history

- Publish your history

- galaxy-toggle Make History publicly available in Published Histories

- Anybody on this Galaxy server will see your history listed under the Shared Data menu

- Share only with another user.

- Click the Share with a user button at the bottom

- Enter an email address for the user you want to share with

- Your history will be shared only with this user.

- Finding histories others have shared with me

- Click on User menu on the top bar

- Select Histories shared with me

- Here you will see all the histories others have shared with you directly

Note: If you want to make changes to your history without affecting the shared version, make a copy by going to galaxy-gear History options icon in your history and clicking Copy

Transfer entire histories from one Galaxy server to another

- Click on galaxy-gear in the history panel of the sender Galaxy server

- Click on Export to File

- Select either exporting history to a link or to a remote file

- Click on the link text to generate a new archive for the history if exporting to a link

- Wait for the link to generate

- Copy the link address or click on the generated link to download the history archive

- Click on User on the top menu of the receiver Galaxy server

- Click on Histories to view saved histories

- Click on Import history in the grey button on the top right

- Select the appropriate importing method based on the choices made in steps 3 and 6

- Choose Export URL from another galaxy instance if link address was copied in step 6

- Select Upload local file from your computer if history archive was downloaded in step 6

- Choose Select a remote file if history was exported to a remote file in step 3

- Click the link text to check out your histories if import is successful

If the history being transferred is too large, you may:

- Click on galaxy-gear in the history panel of the sender Galaxy server

- Click Copy Datasets to move just the important datasets into a new history

- Create the archive from that smaller history

Undeleting history

Undelete your deleted histories

- Click on User then select Histories

- Click on Advanced search on the top left side below Saved Histories

- On Status click Deleted

- Select the history you want to undelete using the checkbox on the left side

- Click Undelete button below the deleted histories

Unsharing unwanted histories

- All account Histories owned by others but shared with you can be reviewed under User > Histories shared with me.

- The other person does not need to unshare a history with you. Unshare histories yourself on this page using the pull-down menu per history.

- Dataset and History privacy options, including sharing, can be set under User > Preferences.

Three key features to work with shared data are:

- View is a review feature. The data cannot be worked with, but many details, including tool and dataset metadata/parameters, are included.

- Copy those you want to work with. This will increase your quota usage. This will also allow you to manipulate the datasets or the history independently from the original owner. All History/Dataset functions are available if the other person granted full access to the datasets to you.

- Unshare any on the list not needed anymore. After a history is copied, you will still have your version of the history, even if later unshared or the other person who shared it with you changes their version later. Meaning, that each account’s version of a History and the Datasets in it are distinct (unless the Datasets were not shared, you will still only be able to “view” but not work with or download them).

Note: “Histories shared with me” result in only a tiny part of your quota usage. Unsharing will not significantly reduce quota usage unless hundreds (or more!) or many significant histories are shared. If you share a History with someone else, that does not increase or decrease your quota usage.

Interactive tools

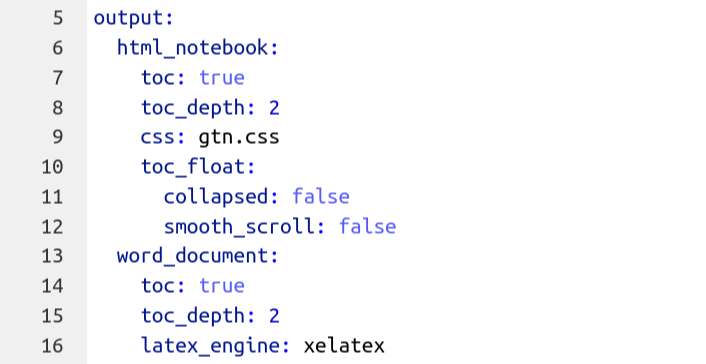

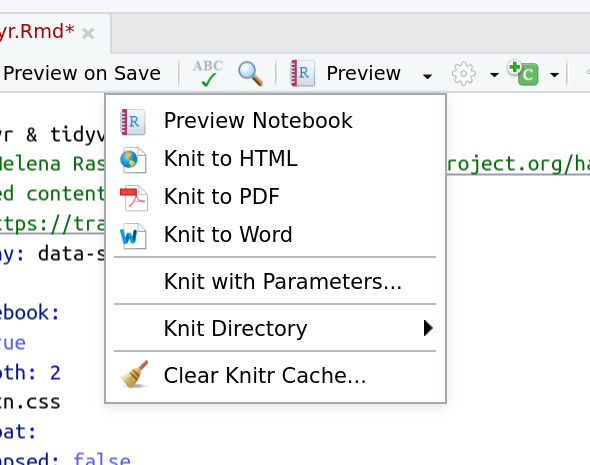

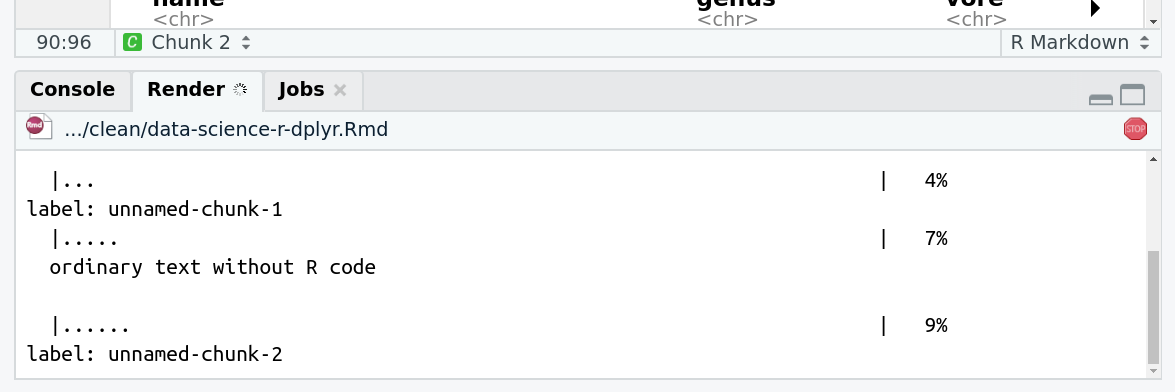

Knitting RMarkdown documents in RStudio

Hands-on: Knitting RMarkdown documents in RStudio

One of the other nice features of RMarkdown documents is making presentation-quality documents. You can take, for example, a tutorial and produce a nice report-like output as an HTML, PDF, or `.doc` document that can easily be shared with colleagues or students.

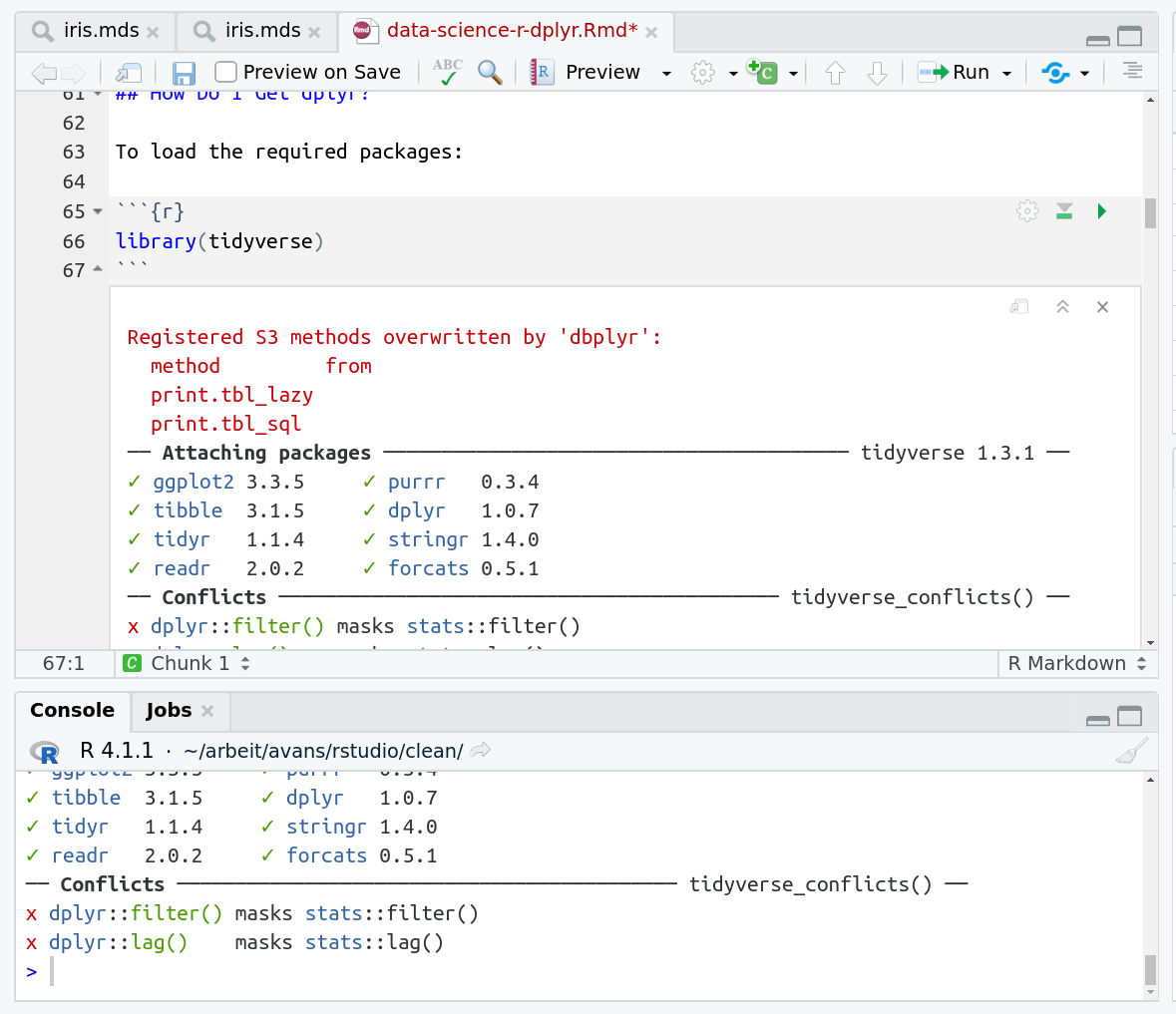

Now you’re ready to preview the document:

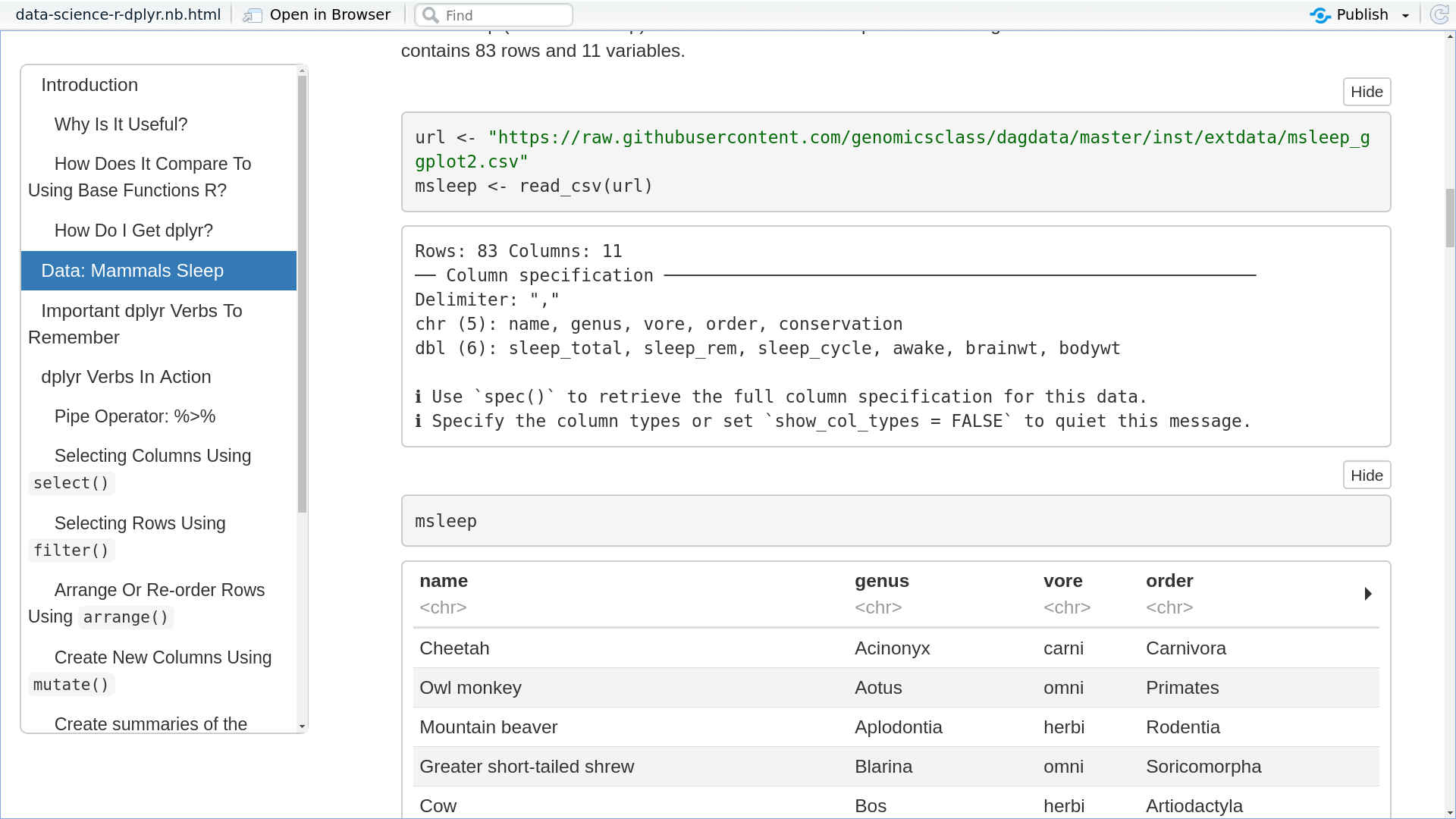

Click Preview. A window will pop up with a preview of the rendered version of this document.

The preview is really similar to the GTN rendering, no cells have been executed, and no output is embedded yet in the preview document. But if you have run cells (e.g. the first few loading a library and previewing the `msleep` dataset:

When you’re ready to distribute the document, you can instead use the `Knit` button. This runs every cell in the entire document fresh, and then compiles the outputs together with the rendered markdown to produce a nice result file as HTML, PDF, or Word document.

Tip: PDF + Word require a LaTeX installation

You might need to install additional packages to compile the PDF and Word document versions

And at the end you can see a pretty document rendered with all of the output of every step along the way. This is a fantastic way to e.g. distribute read-only lesson materials to students, if you feel they might struggle with using an RMarkdown document, or just want to read the output without doing it themselves.

Launch JupyterLab

Hands-on: Launch JupyterLab

Tip: Launch JupyterLab in Galaxy

Currently JupyterLab in Galaxy is available on Live.useGalaxy.eu, usegalaxy.org and usegalaxy.eu.

Hands-on: Run JupyterLab

- Interactive Jupyter Notebook Tool: interactive_tool_jupyter_notebook :

- Click Execute

- The tool will start running and will stay running permanently

- Click on the User menu at the top and go to Active Interactive Tools and locate the JupyterLab instance you started.

- Click on your JupyterLab instance

Tip: Launch Try JupyterLab if not available on Galaxy

If JupyterLab is not available on the Galaxy instance:

- Start Try JupyterLab

Launch RStudio

Hands-on: Launch RStudio

Depending on which server you are using, you may be able to run RStudio directly in Galaxy. If that is not available, RStudio Cloud can be an alternative.

Launch RStudio in Galaxy

Currently RStudio in Galaxy is only available on UseGalaxy.eu and UseGalaxy.org

- Open the RStudio tool by clicking here to launch RStudio

- Click Execute

- The tool will start running and will stay running permanently

- Click on the “User” menu at the top and go to “Active InteractiveTools” and locate the RStudio instance you started.

Launch RStudio Cloud if not available on Galaxy

If RStudio is not available on the Galaxy instance:

- Register for RStudio Cloud, or login if you already have an account

- Create a new project

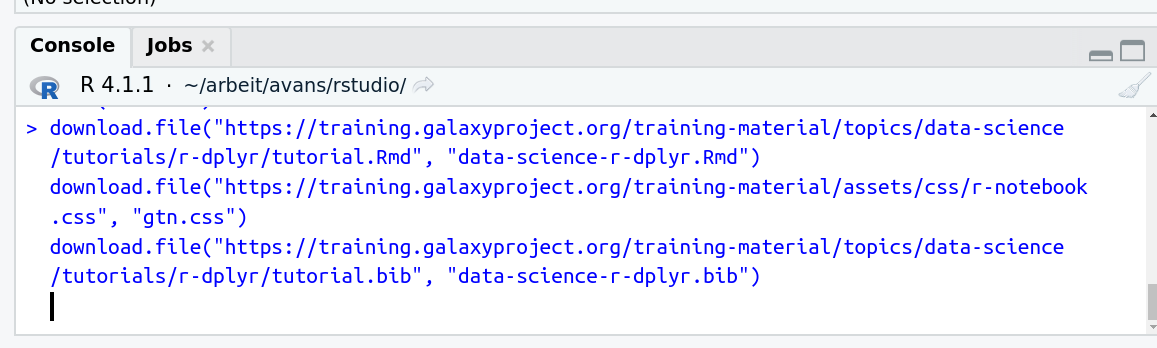

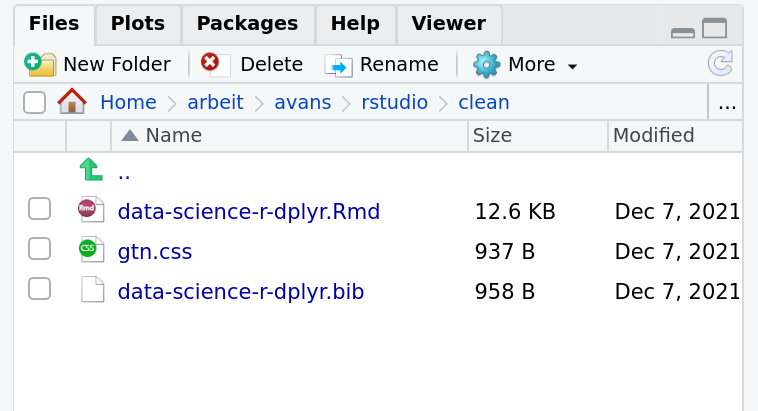

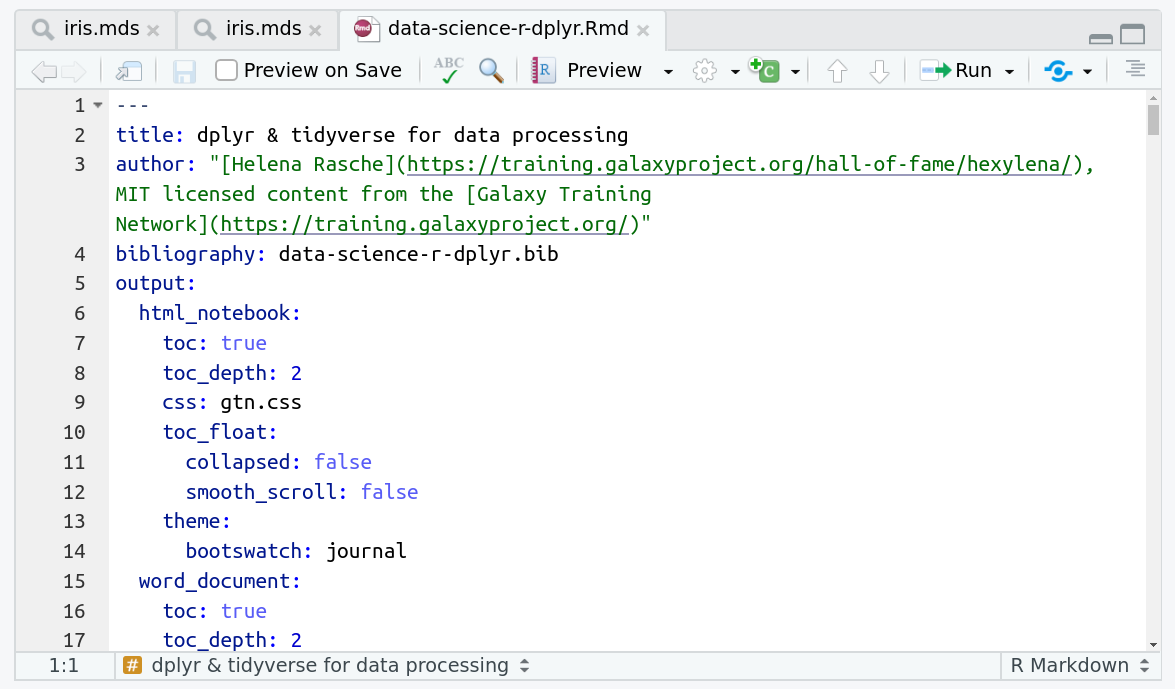

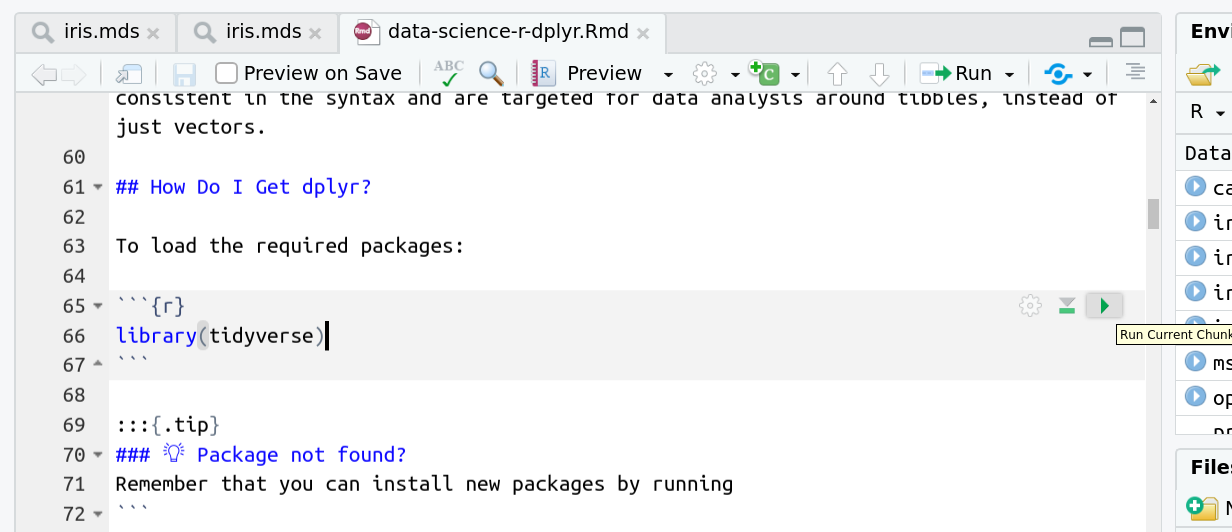

Learning with RMarkdown in RStudio

Hands-on: Learning with RMarkdown in RStudio

Learning with RMarkdown is a bit different than you might be used to. Instead of copying and pasting code from the GTN into a document you’ll instead be able to run the code directly as it was written, inside RStudio! You can now focus just on the code and reading within RStudio.

Load the notebook if you have not already, following the tip box at the top of the tutorial

Open it by clicking on the `.Rmd` file in the file browser (bottom right)

The RMarkdown document will appear in the document viewer (top left)

You’re now ready to view the RMarkdown notebook! Each notebook starts with a lot of metadata about how to build the notebook for viewing, but you can ignore this for now and scroll down to the content of the tutorial.

You’ll see codeblocks scattered throughout the text, and these are all runnable snippets that appear like this in the document:

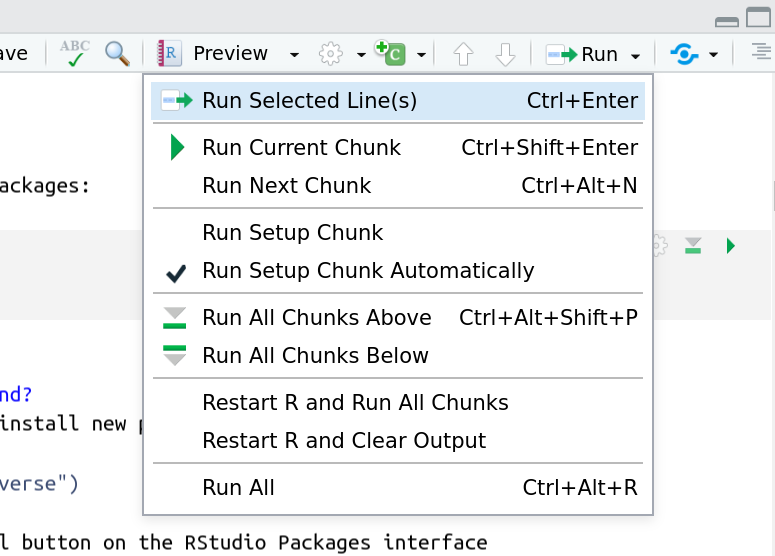

And you have a few options for how to run them:

- Click the green arrow

- ctrl+enter

Using the menu at the top to run all

When you run cells, the output will appear below in the Console. RStudio essentially copies the code from the RMarkdown document, to the console, and runs it, just as if you had typed it out yourself!

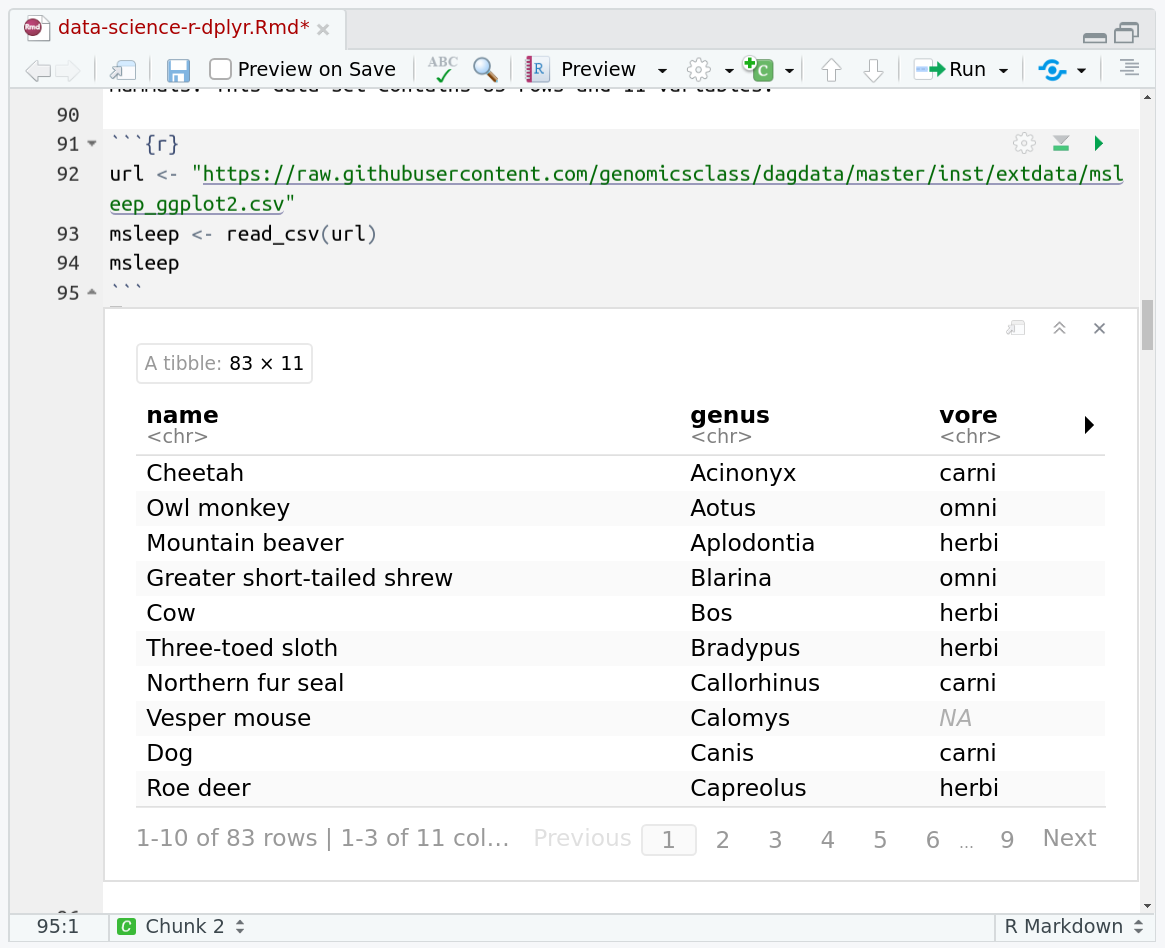

One of the best features of RMarkdown documents is that they include a very nice table browser which makes previewing results a lot easier! Instead of needing to use `head` every time to preview the result, you get an interactive table browser for any step which outputs a table.

Open a Terminal in Jupyter

Hands-on: Open a Terminal in Jupyter

This tutorial will let you accomplish almost everything from this view, running code in the cells below directly in the training material. You can choose between running the code here, or opening up a terminal tab in which to run it.

Here are some instructions for how to do this on various environments.

Jupyter on UseGalaxy.* and MyBinder.org

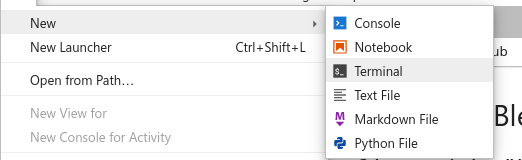

Use the File → New → Terminal menu to launch a terminal.

Disable “Simple” mode in the bottom left hand corner, if it is activated.

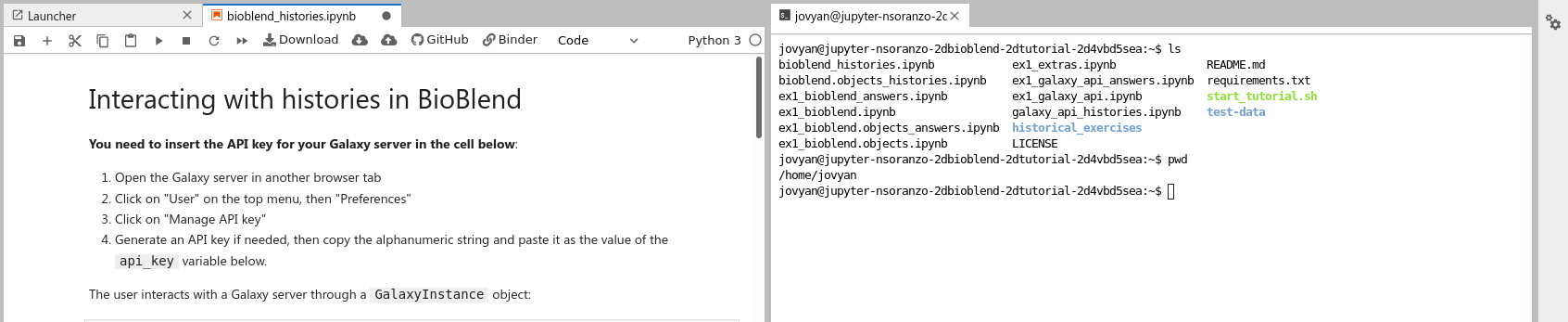

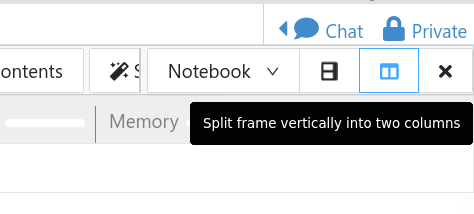

Drag one of the terminal or notebook tabs to the side to have the training materials and terminal side-by-side

CoCalc

Use the Split View functionality of cocalc to split your view into two portions.

Change the view of one panel to a terminal

Open interactive tool

- Go to User > Active InteractiveTools

- Wait for the interactive tool to be running (Job Info)

- Click on the interactive tool to open it

Stop RStudio

Hands-on: Stop RStudio

When you have finished your R analysis, it’s time to stop RStudio.

- First, save your work into Galaxy, to ensure reproducibility:

- You can use gx_put(filename) to save individual files by supplying the filename.

- You can use gx_save() to save the entire analysis transcript and any data objects loaded into your environment.

- Once you have saved your data, you can proceed in 2 different ways:

- Delete the corresponding history dataset, which is named RStudio and shows an “in progress” state (yellow), OR

- Click on the “User” menu at the top, go to “Active InteractiveTools”, locate the RStudio instance you started, select the corresponding box, and finally click on the “Stop” button at the bottom.

Reference genomes

How to use Custom Reference Genomes?

A reference genome contains the nucleotide sequence of the chromosomes, scaffolds, transcripts, or contigs for a single species. It is representative of a specific genome build or release. There are two options to use reference genomes in Galaxy: native (provided by the server administrators and used by most of the tools) and custom (uploaded by users in FASTA format).

There are five basic steps to use a Custom Reference Genome:

- Obtain a FASTA copy of the target genome.

- Use FTP to upload the genome to Galaxy and load into a history as a dataset.

- Clean up the format with the tool NormalizeFasta using the options to wrap sequence lines at 80 bases and to trim the title line at the first whitespace.

- Make sure the chromosome identifiers are a match for other inputs.

- Set a tool form’s options to use a custom reference genome from the history and select the loaded genome.
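The clean-up in step 3 can be pictured with a small Python sketch. This is not the NormalizeFasta implementation; it is a minimal illustration, assuming the FASTA is available as a list of text lines, of what "wrap at 80 bases" and "trim the title line at the first whitespace" mean. The function name normalize_fasta and its width parameter are hypothetical.

```python
def normalize_fasta(lines, width=80):
    """Yield cleaned FASTA lines: titles trimmed, sequence rewrapped.

    Illustrative sketch only; not the code behind the Galaxy tool.
    """
    seq_parts = []  # sequence pieces collected for the current record

    def flush():
        # Join the collected pieces and re-emit them at a fixed width.
        sequence = "".join(seq_parts)
        seq_parts.clear()
        for start in range(0, len(sequence), width):
            yield sequence[start:start + width]

    for raw in lines:
        line = raw.strip()
        if not line:
            continue  # drop empty lines
        if line.startswith(">"):
            yield from flush()
            # Keep only the identifier: everything before the first whitespace.
            yield line.split()[0]
        else:
            seq_parts.append(line)
    yield from flush()


example = [">chr1 Homo sapiens chromosome 1\n", "ACGT\n", "AC\n", "\n", ">chrM mito\n", "GG\n"]
for cleaned in normalize_fasta(example, width=4):
    print(cleaned)
```

With width=4 the example prints the two identifiers without their descriptions and the first sequence rewrapped into lines of at most four bases.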

Sorting Reference Genome

Certain tools expect reference genomes to be sorted in lexicographical order. These tools are often downstream of the initial mapping tools, which means that a large investment in a project has already been made before a sorting problem surfaces in the final analysis steps. To avoid this, always sort your FASTA reference genome dataset at the beginning of a project. Many sources only provide sorted genomes, but double checking is your own responsibility, and super easy in Galaxy!

- Convert Formats -> FASTA-to-Tabular

- Filter and Sort -> Sort on column: c1 with flavor: Alphabetical everything in: Ascending order

- Convert Formats -> Tabular-to-FASTA

Note: The above sorting method is for most tools, but not all. In particular, GATK tools have a tool-specific sort order requirement.
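To check outside Galaxy what the three-step sort produces, the same idea can be sketched in Python. This is a minimal illustration, assuming the genome fits in memory as a list of lines; read_fasta and sort_fasta are hypothetical helper names, and Python's default string ordering may differ in detail from the Galaxy Sort tool's "Alphabetical" flavor.

```python
def read_fasta(lines):
    """Group FASTA lines into (title, [sequence lines]) records."""
    records = []
    for raw in lines:
        line = raw.rstrip("\n")
        if line.startswith(">"):
            records.append((line, []))
        elif line and records:
            records[-1][1].append(line)
    return records


def sort_fasta(lines):
    """Return FASTA lines with records ordered lexicographically by title."""
    ordered = sorted(read_fasta(lines), key=lambda record: record[0])
    output = []
    for title, sequence_lines in ordered:
        output.append(title)
        output.extend(sequence_lines)
    return output


unsorted_genome = [">chr2", "AA", ">chr10", "CC", ">chr1", "GG"]
print(sort_fasta(unsorted_genome))
```

Note that lexicographical order places chr10 before chr2, which is the order this sketch produces.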

Troubleshooting Custom Genome fasta

If a custom genome/transcriptome/exome dataset is producing errors, double check the format and that the chromosome identifiers match between ALL inputs. Clicking on the bug icon will often provide a description of the problem. This does not automatically submit a bug report, and it is not always necessary to do so, but it is a good way to get some information about why a job is failing.
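For a file you have locally, a quick format check can be sketched in Python before uploading. This is only an illustration under simple assumptions (the file is read as a list of lines, and "strict" wrapping means every sequence line of a record has the same length except a possibly shorter last line). The names check_fasta and wrapping_ok are hypothetical.

```python
import re

BLANK = re.compile(r"^\s*$")  # empty or whitespace-only line


def wrapping_ok(lengths):
    """All sequence lines but the last share one length; the last is not longer."""
    if len(lengths) < 2:
        return True
    body, last = lengths[:-1], lengths[-1]
    return len(set(body)) == 1 and last <= body[0]


def check_fasta(lines):
    """Return a list of human-readable problems found in FASTA lines."""
    problems = []
    current = None  # identifier of the record being read
    lengths = []    # sequence line lengths of that record
    for number, raw in enumerate(lines, start=1):
        line = raw.rstrip("\n")
        if BLANK.match(line):
            problems.append(f"line {number}: empty line")
        elif line.startswith(">"):
            if current is not None and not wrapping_ok(lengths):
                problems.append(f"{current}: inconsistent line wrapping")
            parts = line[1:].split()
            current = parts[0] if parts else ""
            lengths = []
        else:
            lengths.append(len(line))
    if current is not None and not wrapping_ok(lengths):
        problems.append(f"{current}: inconsistent line wrapping")
    return problems


print(check_fasta([">chr1 desc", "ACGT", "AC", "ACGT", "", ">chr2", "GG"]))
```

An empty result list means none of the checked problems were found; it does not guarantee that every tool will accept the file.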

Custom genome not assigned as FASTA format

- Symptoms include: Dataset not included in custom genome “From history” pull down menu on tool forms.

- Solution: Check datatype assigned to dataset and assign fasta format.

- How: Click on the dataset’s pencil icon to reach the “Edit Attributes” form, and in the Datatypes tab, redetect the datatype.

- If fasta is not assigned, there is a format problem to correct.

Incomplete Custom genome file load

- Symptoms include: Tool errors result the first time you use the Custom genome.

- Solution: Use Text Manipulation → Select last lines from a dataset to check the last 10 lines and see whether the file is truncated.

- How: Reload the dataset (switch to FTP if not using already). Check your FTP client logs to make sure the load is complete.

Extra spaces, extra lines, inconsistent line wrapping, or any deviation from strict FASTA format

- Symptoms include: RNA-seq tools (Cufflinks, Cuffcompare, Cuffmerge, Cuffdiff) fail with the error “Error: sequence lines in a FASTA record must have the same length!”

- Solution: Test and correct the file locally and then re-upload it, or test/fix it within Galaxy, then re-run.

- How:

- Quick re-formatting: Run the dataset through the tool NormalizeFasta using the options to wrap sequence lines at 80 bases and to trim the title line at the first whitespace.

- Optional detailed re-formatting: Start with FASTA manipulation → FASTA Width formatter with a value between 40-80 (60 is common) to reformat wrapping. Next, use Filter and Sort → Select with “>” to examine identifiers. Use a combination of Convert Formats → FASTA-to-Tabular, Text Manipulation tools, then Tabular-to-FASTA to correct.

- With either of the above, finish by using Filter and Sort → Select with ^\s*$ to search for empty lines (use “NOT matching” to remove these lines and output a properly formatted fasta dataset).

Inconsistent line wrapping, common if merging chromosomes from various Genbank records (e.g. primary chroms with mito)

- Symptoms include: Tools (SAMTools, Extract Genomic DNA, but rarely alignment tools) may complain about unexpected line lengths/missing identifiers. Or they may just fail for what appears to be a cluster error.

- Solution: Test and correct the file locally and then re-upload it, or test/fix it within Galaxy.

- How: Use NormalizeFasta with the options to wrap sequence lines at 80 bases and to trim the title line at the first whitespace. Finish by using Filter and Sort → Select with ^\s*$ to search for empty lines (use “NOT matching” to remove these lines and output a properly formatted fasta dataset).

Unsorted fasta genome file

- Symptoms include: Tools such as Extract Genomic DNA report problems with sequence lengths.

- Solution: First try sorting and re-formatting in Galaxy then re-run.

- How: To sort, follow the instructions under Sorting Reference Genome.

Identifier and Description in “>” title lines used inconsistently by tools in the same analysis

- Symptoms include: Will generally manifest as a false genome-mismatch problem.

- Solution: Remove the description content and re-run all tools/workflows that used this input. Mapping tools will usually not fail, but downstream tools will. When this comes up, it usually means that an analysis needs to be started over from the mapping step to correct the problems. No one enjoys redoing this work. Avoid the problems by formatting the genome, by double checking that the same reference genome was used for all steps, and by making certain the ‘identifiers’ are a match between all planned inputs (including reference annotation such as GTF data) before using your custom genome.

- How: To drop the title line description content, use NormalizeFasta with the options to wrap sequence lines at 80 bases and to trim the title line at the first whitespace. Next, double check that the chromosome identifiers are an exact match between all inputs.
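The identifier comparison described above can be sketched in Python for files you have locally. This is a minimal illustration, assuming a FASTA genome and a tab-separated GTF annotation whose first column holds the sequence name. The helper names fasta_ids, gtf_ids and missing_from_reference are hypothetical.

```python
def fasta_ids(lines):
    """Identifiers from '>' title lines: the text up to the first whitespace."""
    ids = set()
    for line in lines:
        if line.startswith(">"):
            parts = line[1:].split()
            if parts:
                ids.add(parts[0])
    return ids


def gtf_ids(lines):
    """Sequence names from the first tab-separated column of GTF lines."""
    ids = set()
    for line in lines:
        if line.strip() and not line.startswith("#"):
            ids.add(line.split("\t", 1)[0])
    return ids


def missing_from_reference(fasta_lines, annotation_lines):
    """Annotation sequence names that have no matching FASTA identifier."""
    return sorted(gtf_ids(annotation_lines) - fasta_ids(fasta_lines))


genome = [">chr1 primary assembly", "ACGT", ">chrM", "GG"]
annotation = [
    "#example annotation",
    'chr1\tsrc\texon\t1\t4\t.\t+\t.\tgene_id "g1";',
    'MT\tsrc\texon\t1\t2\t.\t+\t.\tgene_id "g2";',
]
print(missing_from_reference(genome, annotation))
```

Any name reported here (MT in the example, where the genome uses chrM) points to the kind of mismatch that later shows up as a genome-mismatch error.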

Unassigned database

- Symptoms include: Tools report that no build is available for the assigned reference genome.

- Solution: This occurs with tools that require an assigned database metadata attribute. SAMTools and Picard often require this assignment.

- How: Create a Custom Build and assign it to the dataset.

Sequencing

Illumina MiSeq sequencing

Comment: Illumina MiSeq sequencing

Illumina MiSeq sequencing is based on sequencing by synthesis. As the name suggests, fluorescent labels are measured for every base that binds at a specific moment at a specific place on a flow cell. These flow cells are covered with oligos (small single-stranded DNA strands). In the library preparation the DNA strands are cut into small DNA fragments (differs per kit/device) and specific pieces of DNA (adapters) are added, which are complementary to the oligos. Using bridge amplification, large amounts of clusters of these DNA fragments are made. The reverse strand is washed away, making the clusters single stranded. Fluorescent bases are added one by one, which emit a specific light for different bases when added. This is happening for whole clusters, so this light can be detected and this data is basecalled (translation from light to a nucleotide) to a nucleotide sequence (Read). For every base a quality score is determined and also saved per read. This process is repeated for the reverse strand on the same place on the flow cell, so the forward and reverse reads are from the same DNA strand. The forward and reverse reads are linked together and should always be processed together!

For more information watch this video from Illumina

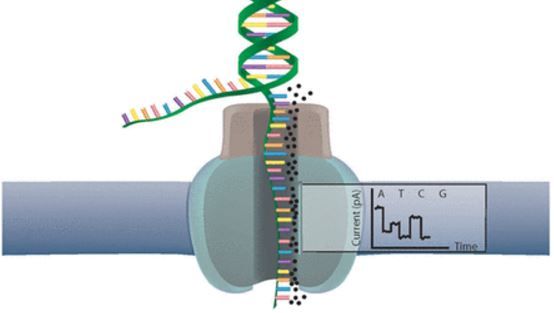

Nanopore sequencing

Comment: Nanopore sequencing

Nanopore sequencing has several properties that make it well-suited for our purposes:

- Long-read sequencing technology offers simplified and less ambiguous genome assembly

- Long-read sequencing gives the ability to span repetitive genomic regions

- Long-read sequencing makes it possible to identify large structural variations

When using Oxford Nanopore Technologies (ONT) sequencing, the change in electrical current is measured over the membrane of a flow cell. When nucleotides pass the pores in the flow cell the current change is translated (basecalled) to nucleotides by a basecaller. A schematic overview is given in the picture above.

When sequencing using a MinIT or MinION Mk1C, the basecalling software is present on the devices. With basecalling the electrical signals are translated to bases (A,T,G,C) with a quality score per base. The sequenced DNA strand will be basecalled and this will form one read. Multiple reads will be stored in a fastq file.
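To make "a quality score per base" concrete, here is a small Python sketch that reads FASTQ records and decodes the quality line. It assumes the common four-line record layout and Phred+33 encoding (quality score = character code minus 33). The function name parse_fastq and the example read are made up for illustration.

```python
def parse_fastq(lines):
    """Yield (read_id, sequence, scores) from four-line FASTQ records.

    Assumes Phred+33 encoded qualities; other encodings need a different offset.
    """
    stripped = [line.rstrip("\n") for line in lines if line.strip()]
    for i in range(0, len(stripped) - 3, 4):
        header, sequence, _plus, quality = stripped[i:i + 4]
        scores = [ord(char) - 33 for char in quality]
        # The read id is the header text after '@', up to the first whitespace.
        yield header[1:].split()[0], sequence, scores


example_read = ["@read1 example\n", "ACGT\n", "+\n", "!5?I\n"]
for read_id, sequence, scores in parse_fastq(example_read):
    print(read_id, sequence, scores)
```

Each score Q corresponds to an estimated error probability of 10^(-Q/10), so a score of 20 means roughly a 1 in 100 chance that the base call is wrong.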

Support

Contacting Galaxy Administrators

If you suspect there is something wrong with the server, or would like to request a tool to be installed, you should contact the server administrators for the Galaxy you are on.

- Tool error? Please follow these troubleshooting steps

- Each Galaxy server has different contact procedures. Here are the contact options for the 3 biggest servers:

- Galaxy US: Email, Gitter channel

- Galaxy EU: Gitter channel, Request TIaaS

- Galaxy AU: Email, Request a tool, Request Data Quota

- Other Galaxy servers? Check the homepage for more information.

Where do I get more support?

If you need support for using Galaxy, running your analysis or completing a tutorial, please try one of the following options:

- Gitter Chat: You can get help on the Gitter chat platform, on various channels.

- Galaxy Help Forum: You can also have a look at the Galaxy Help Forum. Your question may already have been answered here before. If not, you can post your question here.

- Contact Server Admins: If you think there is a problem with the Galaxy server, or you would like to make a request, contact the Galaxy server administrators.

Tools

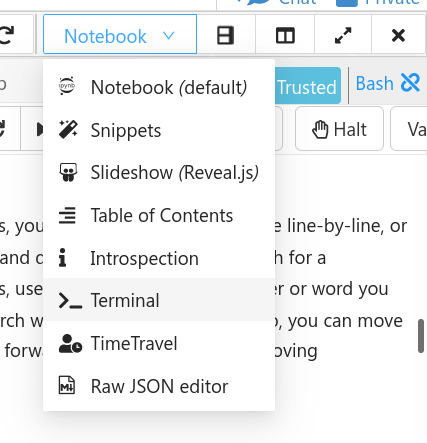

Changing the tool version

Tools are frequently updated to new versions. Your Galaxy may have multiple versions of the same tool available. By default, you will be shown the latest version of the tool.

Switching to a different version of a tool:

- Open the tool

- Click on the versions logo at the top right

- Select the desired version from the dropdown list

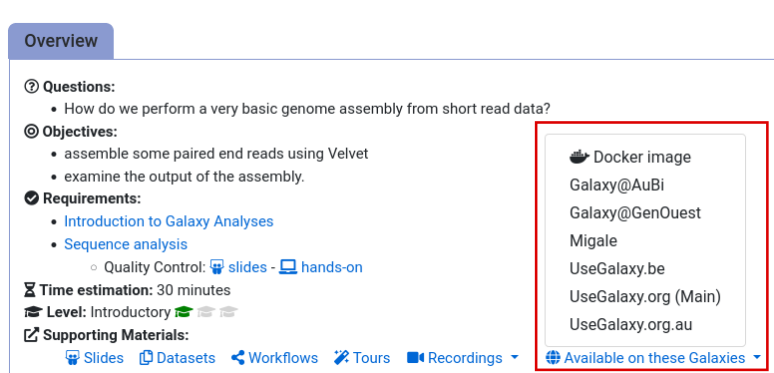

If a Tool is Missing

To use the tools installed and available on the Galaxy server:

- At the top of the left tool panel, type a tool name or datatype into the tool search box.

- Shorter keywords find more choices.

- Tools can also be directly browsed by category in the tool panel.

If you can’t find a tool you need for a tutorial on Galaxy, please:

- Check that you are using a compatible Galaxy server

- Navigate to the overview box at the top of the tutorial

- Find the “Supporting Materials” section

- Check “Available on these Galaxies”

- If your server is not listed here, the tutorial is not supported on your Galaxy server

- You can create an account on one of the supporting Galaxies

- Use the Tutorial mode feature

- Open your Galaxy server

- Click on the curriculum icon on the top menu; this will open the GTN inside Galaxy.

- Navigate to your tutorial

- Tool names in tutorials will be blue buttons that open the correct tool for you

- Note: this does not work for all tutorials (yet)