Design and plan session, course, materials

Overview

Questions:

What is the structured approach to course design?

How to articulate learning outcomes commensurate with the cognitive complexity of the target learning?

How to devise learning experiences and course content?

What are the steps that can help trainers devise and deploy effective courses?

Objectives:

List five key phases of curriculum & course development

Explain the primary role of learning outcomes

Write learning outcomes for a course

Identify the Bloom’s-level accomplishments that different types of learning experience are likely to support

Describe the role of learning outcomes in selecting relevant content

Distinguish different types of assessment & their role in supporting learner progression towards learning outcomes

Summarise the benefits of course evaluation

Requirements:

- Contributing to the Galaxy Training Material

- Principles of learning and how they apply to training and teaching: tutorial hands-on

Time estimation: 60 minutes

Last modification: Oct 18, 2022

Introduction

Teaching and training, core elements of academic life, can be enormously rewarding but also quite challenging. Instructors are often required to perform under various constraints, and frequently have to accommodate, engage and motivate student cohorts with very different backgrounds and aptitudes in limited time-frames. This can be daunting for experienced teachers, but especially so for those who’re relatively new to teaching and training.

Comment: Definitions of key terms

Training: instruction delivered via short courses designed to expand or build knowledge & practical skills in a given field, often conducted in the workplace or training centre to ‘up-skill’ members of a workforce

Teaching: usually, instruction delivered over long time-scales via a series of courses (in schools, colleges, universities, etc.) designed to contribute to a formal programme that, if completed successfully, yields an accredited qualification in a given field (e.g., a degree)

Formal education enterprises generally begin with curriculum design (European Centre for the Development of Vocational Training 2008): this involves specifying

- its purpose or Teaching Goals (TGs);

- its duration;

- the Knowledge, Skills and Abilities (KSAs) intended to be achieved, expressed as a set of Learning Outcomes (LOs);

- how learners will demonstrate achievement of those LOs;

- the materials, Learning Experiences (LEs) and assignments instructors will use to support learner progression towards the LOs;

- the assessments for evaluating student learning and teaching effectiveness.

Comment: Definitions of key terms

Curriculum: the inventory of tasks involving the design, organisation & planning of an education or training enterprise, including specification of learning outcomes, content, materials & assessments, & arrangements for training teachers & trainers

Knowledge, Skills and Abilities (KSAs): list of special qualifications and personal attributes that learners should have after a training

- Knowledge: the subjects, topics, and items of information that learners should know

- Skills: technical or manual proficiencies which are usually learned or acquired through training.

- Abilities: the present demonstrable capacity to apply several knowledge and skills simultaneously in order to complete a task or perform an observable behaviour.

Learning Outcomes (LOs): the KSAs that learners should be able to demonstrate after instruction, the tangible evidence that the teaching goals have been achieved; LOs are learner-centric

Learning Experience (LE): any setting or interaction in or via which learning takes place: e.g., a lecture, game, exercise, role-play, etc.

Teaching Goal (TG): the intentions of an instructor regarding the purpose of a curriculum/course/lesson/activity/set of materials; TGs are instructor-centric (also termed instructional objectives)

Emphasis is placed on teaching and learning, and mechanisms for collecting evidence that learners have changed over time (Tractenberg et al. 2020). In this latter sense, the concept of ‘curriculum’ differentiates formal teaching from training, as formal programmes usually afford time for learners both to be able to progress and to demonstrate their progression.

By contrast, training courses are much shorter (measured in days or weeks, rather than years); they hence necessarily focus on acquiring specific KSAs in limited time-frames, generally without consideration of learner progression beyond the course. However, the essential features of effective curricula (i.e., those that achieve their stated LOs for the majority of learners) pertain to instruction on any time-scale; they are thus also relevant for short courses, and provide important considerations for those involved in, or embarking upon, course design (whether face-to-face or online).

With this in mind, this tutorial outlines key steps of curriculum development – and the role of Bloom’s taxonomy (Bloom 1956) – that can be used to inform the design of effective courses.

Comment: Sources

This tutorial is significantly based on Via et al. 2020, one session of the ELIXIR-GOBLET Train the Trainer curriculum and Tractenberg et al. 2020.

Hands-on: Select a topic for a 3-minute training - _⏰ 1 min - Silent reflection_

Choose a topic for a 3-minute training related to Galaxy

Examples:

- Introduction to Galaxy interface

- How to upload data to Galaxy

- Galaxy Training Network

- Identify the target audience

- Identify the prerequisites

For more details about how learning works and values, check our tutorial


Formal curriculum design

Different types of curriculum

To set the scene for our considerations of course design, we examine some of the foundations for effective curriculum development, drawing heavily on the curriculum- and course-development guidelines developed by Tractenberg et al. 2020. Notable here is the fact that different types of curriculum have been defined: i.e., intended, implemented, attained and hidden curricula. Recognising the existence of different curriculum types (or, perhaps, different curricular outcomes) is important because, while the intended curriculum is the starting point, it may not be the curriculum actually attained: i.e., what you aimed to teach and what students actually learned may not be the same.

To improve outcomes, differences between the intended and attained curricula need to be minimised. The only way to discover the attained curriculum is to find out what learning actually occurred. This requires actionable evaluation, to assess whether the teaching goals (TGs) and learning outcomes (LOs) were achieved, to identify weaknesses in the implementation and to highlight improvements needed to remediate them. These considerations are key to developing effective curricula and courses.

Comment: Definitions of key terms

Actionable: supportive of a decision, or the taking of some action by a learner, instructor or institution

Attained curriculum: what learners actually acquire & can demonstrate having followed the implemented curriculum (“UNESCO IBE Glossary of Curriculum-Related Terminology”)

Hidden curriculum: unintended curricular effects: unofficial norms, behaviours & values that are transferred (not necessarily consciously) by the school culture or ethos; this recognises that schooling happens in broad social & cultural environments that influence learning (“The glossary of Education Reform”)

Implemented curriculum: or taught curriculum, how the intended curriculum is delivered in practice: i.e., the teaching & learning activities, & the interactions between learners & teachers, & among learners

Intended curriculum: the formal specification of KSAs that students are expected to achieve & be able to demonstrate having followed the implemented curriculum

Hands-on: Define audience and teaching goal - _⏰ 10 min - Silent reflection_

- Take the topic you chose before

Define teaching objectives

Describe your goals and intentions as instructor:

- stakeholders

- potential professions

- desired performance and/or competences of course completers

- duration

Structuring curriculum design

Curriculum design benefits from being systematic: structured approaches help to orchestrate and clarify what will be taught, why it will be taught and how; they also afford opportunities to evaluate what does and doesn’t work, and hence what needs to change, ultimately leading to improvements in learning outcomes (Fink 2013, Diamond 2008, Nilson 2016). Several different frameworks have been devised to facilitate the design process, but each is motivated by the same underlying philosophy: to help formulate programmes that promote meaningful and enduring learning. If we’re to understand whether we’ve really achieved this, we must

- determine the purpose of the programme (what needs it addresses, why it’s being developed, what learners will gain from it, why it’s important),

- define the intended LOs, and

- develop assessment and evaluation mechanisms that will allow us to measure whether the programme successfully met its goals.

Comment: Definitions of key terms

Assessment: the evaluation or estimation of the nature, quality or ability of someone or something

Not surprisingly, the same principles apply to course design. The process may seem daunting, but for the sake of simplicity, we focus here on one model: i.e., that proposed by Nicholls 2002. Before discussing this further, however, it’s helpful to consider another very important tool used in teaching and learning – Bloom’s taxonomy (Bloom 1956).

Bloom’s taxonomy of cognitive complexity

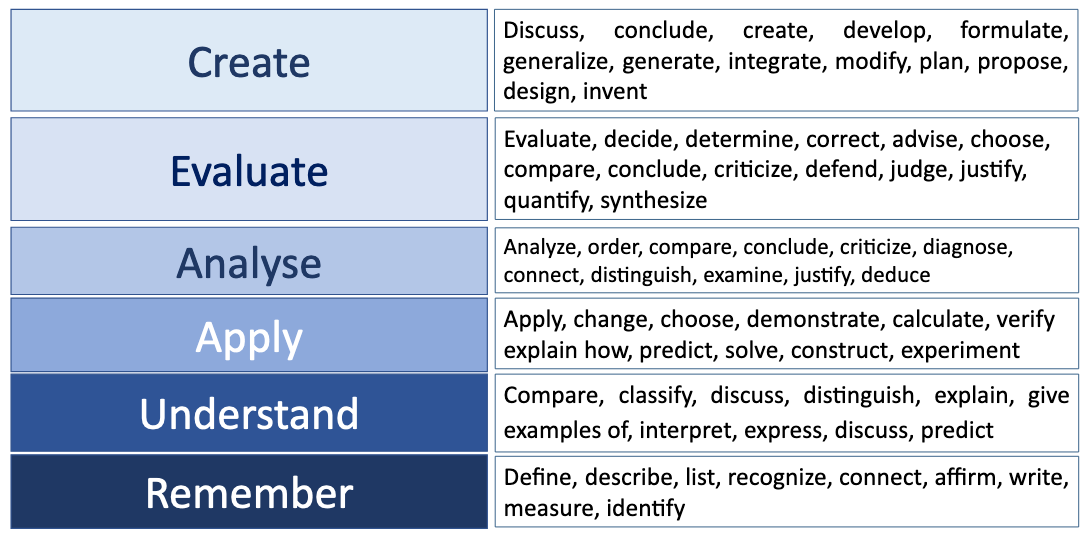

Learning taxonomies are useful tools that can help both to formulate and clarify LOs, and to arrange them on a scale of increasing complexity. Bloom’s taxonomy (Bloom 1956), probably the most easily understood and widely used today, features a six-level hierarchy of cognitive complexity, ranging from Remember (being able to recall facts and basic concepts) to Evaluate being able to defend opinions or decisions), as illustrated in the following figure:

As can be seen from the figure, each Bloom’s level is accompanied by a set of active verbs that express expected, measurable learner behaviours at that level: e.g., achieving the level Understand means to be able to classify, select or explain a piece of information: here, classify, select, explain are observable, assessable behaviours that can be readily encapsulated in coherent LOs.

Typical illustrations of the taxonomy, like that in the figure, depict successive cognitive levels, suggesting that learners must achieve one level before advancing to the next, implying a developmental trajectory from lower- to higher-order cognitive skills. However, this structure should not be regarded as completely rigid; indeed, Anderson et al. 2001 published a revised version in which they placed Synthesise (the ability to create new or original work) at the top of the hierarchy in place of Evaluate. Notwithstanding the minutiae, it is perhaps more fruitful to regard the taxonomy as a continuum or spectrum of cognitive levels, where each merges into the next, providing a structured tool to help express measurable, assessable LOs, in which the cognitive levels are made explicit.

The cognitive aspects embodied in LOs are important. Teaching should promote more complex behaviours than just recall or recognition (unless remembering is the intended LO), and push learners to achieve greater cognitive complexity (Nilson 2016, Ambrose et al. 2010, Weinstein et al. 2018, Roediger III and Karpicke 2006, Jensen et al. 2014). This can be done by embedding development in learning activities and materials, and ensuring that LOs reflect the lowest to the highest levels of cognitive complexity realistically achievable on completing those activities or having engaged with those materials (realistic aims are key, especially for short courses: e.g., expecting learners with no prior subject knowledge to achieve the level Evaluate, say in a 1-day course, will guarantee failure and frustration for learners and instructors alike).

Nicholls’ five phases of curriculum design

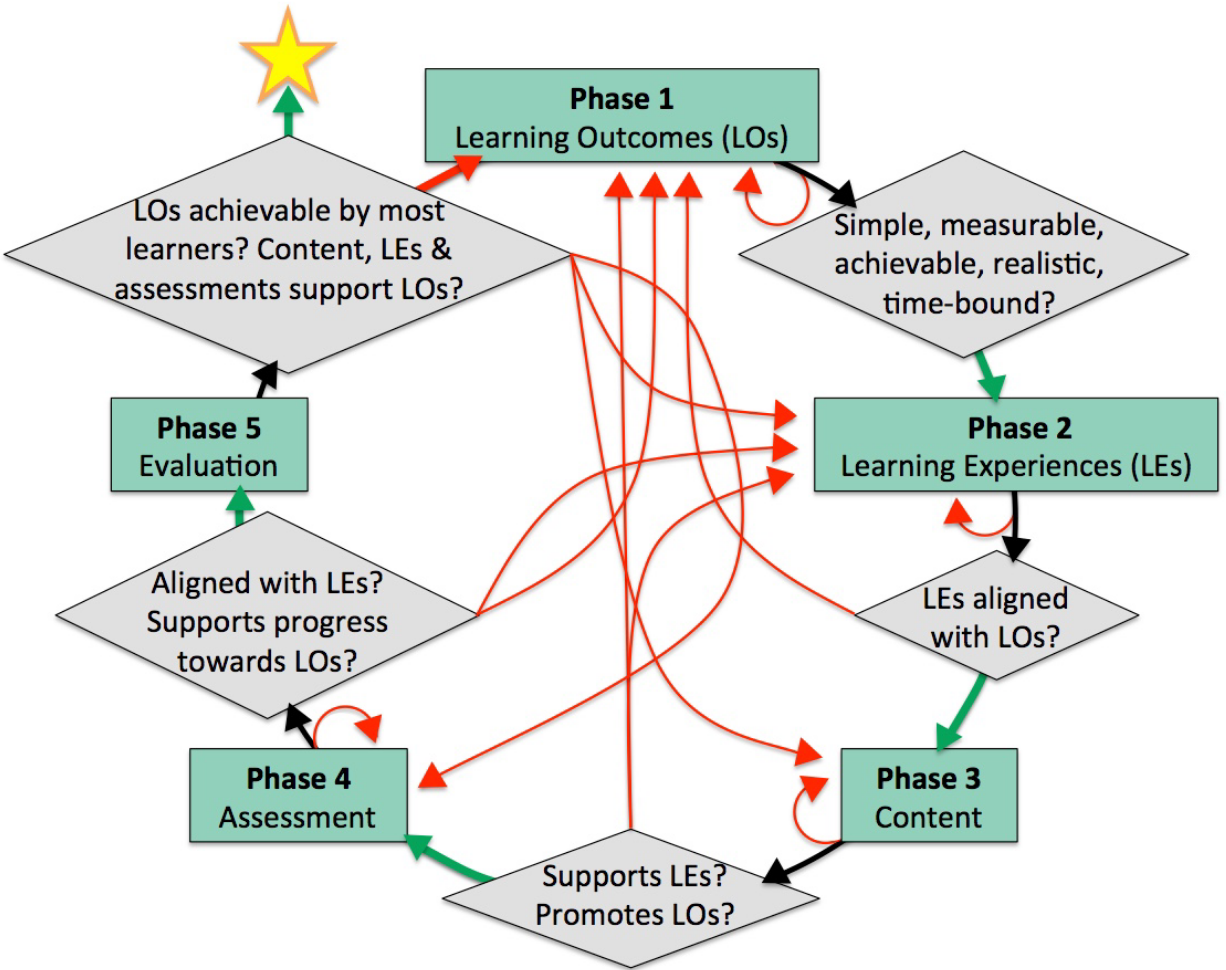

The backdrop for our considerations of course design is Nicholls’ paradigm for curriculum development, illustrated in:

Its five-phase structure has been briefly summarised by Tractenberg et al. 2020, as follows:

- Select LOs;

- Select or develop LEs that will help learners achieve the LOs;

- Select or develop content relevant to LOs;

- Develop assessments to ensure learners progress toward LOs;

- Evaluate the effectiveness of LEs for leading learners to LOs.

As can be seen from Figure 2, the model’s phases are interdependent; all are ultimately dependent on the first – defining LOs. Moreover, the phases are iterative: this means that LOs influence later decisions, but later decisions may also reflect backwards, thereby providing opportunities to check for alignment of each phase to the target LOs (in other words, to ensure that successive phases are mutually consistent with, and supportive of, the LOs). Thus, the role of LOs is pivotal: they must have specific characteristics to function, and support each of the other phases as they do.

Figure 2 illuminates an important feature of the model: that LOs are the starting point, and drive all decision-making. This is just as true for courses as it is for programmes (Diamond 2008). Missing from the model, however, is the dependence of LOs on a hierarchy of cognitive complexity that establishes a developmental trajectory, like that seen in Bloom’s taxonomy. We reflect on this crucial point, and its relevance for course design, in the discussion of each of the five phases below.

Define intended LOs

Comment: What are Learning Outcomes (LOs)?

- Statements expressing which KSAs learners will be able to demonstrate upon completion of a learning experience or a sequence of learning experiences

- What learners will be able to do by the end of a lesson, and what the teacher can in principle evaluate

Just as for curricula, Phase 1 of course design begins with stating the LOs. To help formulate LOs, it’s important to take a step back and think about what you aim to achieve (i.e., what are your TGs and the KSAs you intend to be achieved?), how you propose to get there, and how you’ll know you succeeded. Messick 1994 encapsulated this process in the form of three succinct questions:

- What KSAs are the targets of instruction (and assessment)?

- What learner actions/behaviours will reveal these KSAs?

- What tasks will elicit these specific actions or behaviours?

These questions were originally posed in 1994 in the context of assessment. Their focus on KSAs – the LOs – thus guides not only the creation of relevant tasks (to reveal the target KSAs) but also the rational development of appropriate assessments: i.e., they provide a framework for, and clarify, what to assess. The questions can thus support all phases of course development, starting with the selection of intended KSAs stated in a set of LOs.

Question: Evaluate LOs

Select a tutorial or a course

Several possibilities:

- Take a course you currently run, plan to run or have run in the past

- Take your favorite tutorial from the GTN Material

- Take the dummy tutorial introducing data import that we created for this lesson

- Check the content

Are its intended LOs stated?

For any GTN tutorial, including the dummy one, LOs are always stated at the top of the tutorial, in the Overview box.

Writing coherent LOs is challenging: they must contain appropriate (Bloom’s) verbs (Figure 1) that express measurable, observable and assessable actions, accurately describing what successful learners will be able to do – and at what level of cognitive complexity – after instruction.

Various characteristics of, and principles for articulating, LOs have been published (e.g. “Stanford Institutional Research & Decision Support” or National Institute for Learning Outcomes Assessment 2016): some of these are listed briefly in the box below.

Comment: Learning outcomes should

- be specific & well defined: LOs should concisely state the specific KSAs that learners should develop as a result of instruction;

- be realistic: LOs must be attainable given the context and resources available for instruction, and consistent with learners’ abilities, developmental levels, prerequisite KSAs, and the time needed vs. time available to achieve them;

- rely on active verbs, phrased in the future tense: LOs should be stated in terms of what successful learners will be able to do as a result of instruction;

- focus on learning products, not the learning process: LOs should not state what instructors will do during instruction, but what learners will be able to do as a result of instruction;

- be simple, not compound: LOs shouldn’t include compound statements that join two or more KSAs into one statement;

- be appropriate in number: LOs should be deliverable and assessable within the time available for instruction;

- support assessment that generates actionable evidence: here, actionable means supportive of a decision, or taking some action by a learner or instructor.
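Some of these characteristics can be screened for mechanically before a closer, human review. The sketch below is only an illustration: the function name `check_learning_outcome`, the short list of vague verbs and the crude test for compound statements are all assumptions made for this example, not part of any published tool. It flags statements that open with a verb that is hard to observe, or that appear to join several KSAs with “and”.

```python
import re

# Assumption: an illustrative, non-exhaustive set of verbs that are hard to
# observe or assess. Adapt this list to your own context.
VAGUE_VERBS = {"know", "appreciate", "learn"}


def check_learning_outcome(lo: str) -> list[str]:
    """Return a list of warnings for one learning outcome statement.

    The statement is expected to be the part that follows
    "learners will be able to ...".
    """
    words = re.findall(r"[a-z']+", lo.lower())
    if not words:
        return ["empty statement"]

    warnings = []
    # The first word should be an active, observable verb.
    if words[0] in VAGUE_VERBS:
        warnings.append(f"vague verb: '{words[0]}'")
    # Crude heuristic: an "and" may signal a compound statement.
    if "and" in words[1:]:
        warnings.append("possibly compound: contains 'and'")
    return warnings


for statement in [
    "know how to upload data to Galaxy",
    "upload data to Galaxy",
    "describe and explain the Galaxy interface",
]:
    print(statement, "->", check_learning_outcome(statement))
```

A check like this cannot judge whether an LO is realistic or appropriate in number; it only catches surface problems, so the warnings are prompts for revision rather than verdicts.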

Hands-on: Learning outcomes - _⏰ 2 min - Silent working_

- Take the small lesson you selected at the beginning

- Try to jot a few LOs down

Given their detail and complexity, and the importance of aligning the instructional inputs you devise with the outcomes for learners you intend, it can be hard to know where to start. This possibly explains why it may feel easier to begin developing a course by selecting its content rather than first trying to understand its impact on student learning. Nevertheless, ensuring that target LOs meet, or are consistent with, the characteristics outlined in the box above helps to promote better alignment of instructional inputs and learner outcomes.

Quiz: Evaluate Learning outcomes

Check your knowledge with a quiz!

- Self Study Mode - do the quiz at your own pace, to check your understanding.

- Classroom Mode - do the quiz synchronously with a classroom of students.

“By the end of the course learners will know the Pythagorean theorem”

This LO is not good:

- How to assess whether learners know the Pythagorean theorem?

- What do we mean by “knowing” the Pythagorean theorem?

- Are they able to state it?

- Are they able to explain it?

- Are they able to apply it?

- Are they able to demonstrate it?

- Are they able to use it to solve problems?

It would make more sense to ask: “What will learners be able to do to show they know the Pythagorean theorem?”

In short, when defining LOs, the key question to ask is, are they SMART?

- Specific / Simple

- Measurable

- Achievable / Attainable

- Realistic / Relevant

- Time-bound / Transferable

If they do not satisfy this test, they should be revised; only when they meet these criteria is it safe to progress to Phase 2, as shown in Figure 2. SMART LOs will be the roadmap as we plan out instructional strategies, write the content and create assessments.

Hands-on: Create SMART LOs - _⏰ 10 min - Silent working_

- Take the LOs you created

- Consider if your LOs are SMART

Try to revise any of them that do not meet the SMART criteria as follows:

- Select an active verb that can (in principle) be observed & assessed

Complete the sentence

“At the end of this course, learners will be able to…”

If it helps, review the verbs of the Bloom’s taxonomy listed in Figure 1.

It’s important to:

- Focus here on what learners will be able to do at the end of instruction, e.g. will they be able to…

- … describe its content?,

- … explain a concept?,

- … implement an algorithm?,

- … solve a problem?,

- … evaluate results?

- Avoid verbs open to multiple interpretations

- Use a verb that describes an observable action

Determine how well you have structured your LOs

- Visit the Intended Learning Outcome Advisor

- Paste each LO into the input box

- Press the SUBMIT button

How well did you do?

- Consider revising your LOs if the Advisor identified any issues

Ultimately, LOs provide the necessary structure and context for decision-making by instructors (and learners), hence their primary role in course design.

Select LEs that will lead to the LOs

Phase 2 involves identifying the most appropriate LEs to lead learners to the intended LOs. It is important to appreciate that different LEs can lead learners to demonstrate different Bloom’s-level accomplishments: e.g.,

- lectures differ from problem-sets – solving problems helps learners to work with, and manipulate, information rather than passively listening to it;

- similarly, lab exercises differ from writing computer programs – writing original code affords learners the opportunity to create something new rather than simply following instructions.

Some example LEs are listed in Table 1, together with the Bloom’s level and the kinds of TG (Teaching Goals) and LO that each may support.

| Learning experience | Highest Bloom’s levels supported | Example TG(s) “This LE will allow me to…” | Example LO(s) “Learners will be able to…” |
|---|---|---|---|
| Lecture, webinar | Remember, Comprehend | Inspire learners, ignite learners’ enthusiasm, clarify/explain a concept, provide an overview, give context, summarise content | list the key points of the lecture/webinar, summarise take home message(s) |
| Exercise, practical | Apply, Analyse | Help learners digest course materials, solve typical problems, apply knowledge, show how to do things with appropriate guidance, give an idea of how a tool works | follow a set of instructions or protocol, calculate a set of results or outcomes from a given protocol |
| Flipped class | Apply, Analyse | Teach learners how to formulate questions, help learners to memorise new information & concepts, or analyse & understand course materials | summarise the content material, ask appropriate questions |
| Peer instruction | Synthesise, Evaluate | Prepare learners to defend an argument, give learners opportunities to explain things, thereby helping to develop critical thinking & awareness | explain how they solved an exercise, evaluate others’ choices/decisions, diagnose errors in the exercise-solving task |
| Group discussion | Synthesise, Evaluate | Give learners opportunities to practice questioning, develop new ideas & critical thinking | communicate their own ideas, defend their own opinions |
| Group work | Synthesise, Evaluate | Promote collaborative work & peer instruction, provide opportunities for giving/receiving feedback, & digesting course materials | provide feedback on their peers’ work, share ideas, explain the advantages of team-work |
| Problem-solving | Synthesise, Evaluate | Promote learner abilities to identify & evaluate solutions, develop new ideas, make decisions, evaluate decision effectiveness, troubleshoot | diagnose faulty reasoning or an underperforming result, correct errors |

Comment: Definitions of key terms

Exercise: an activity designed to help learners to mentally put into practice learned skills & knowledge

Flipped class: a learner-centred approach in which students are introduced to new topics prior to class; class time is then used to explore those topics in greater depth via interactive activities

Group discussion: an in-class, learner-centred approach in which students discuss ideas, solve problems &/or answer questions, guided by the instructor

Group work: a learner-centred approach in which students are organised into groups (& perhaps assigned specific roles) & are given tasks to perform collaboratively

Lecture: a didactic approach in which oral presentation is used to describe & explain concepts & to impart facts

Peer-instruction: an interactive, in-class, learner-centred approach in which groups of two or more students briefly discuss a question or assignment given by the instructor

Practical: an activity to put into practice learned skills & knowledge, generally in a lab setting

Problem-solving: a learner-centred approach in which students are required to systematically investigate a problem by building or determining the best strategy to solve it (using what is known to discover what is not known)

Webinar: a lecture delivered online

Having defined SMART LOs in Phase 1, Phase 2 thus hinges on choosing the most appropriate LEs to best lead learners towards them: if LOs include, for example, being able to write a computer program, then the LEs must allow learners to apply the knowledge they have acquired and to demonstrate that they’ve written a piece of functional code: i.e., LEs and LOs must be aligned (if they are not, this can lead directly to a gap between instructional inputs and intended outcomes, which is one reason why course evaluation to detect such misalignments is so crucial). If LEs don’t satisfy this criterion, alternative LEs should be found, or the LOs should be revisited and revised before progressing to Phase 3 (as shown in Figure 2).

Sometimes, it may be necessary to use specific LEs: e.g., if a course is lecture-based, it may not be possible to choose alternatives; or a particular teaching scenario may not allow for an ideal LE. Even if you’re not in a position to select the most appropriate LEs, the LOs must still be consistent with the given LEs; and if those LEs won’t help learners to achieve the LOs, then the LOs should be revised – i.e., LOs are still the most important feature to consider. The key is to determine exactly what specific LEs can contribute to learning or how they will help move learners towards the LOs.

Quiz: LEs in GTN tutorials

Check your knowledge with a quiz!

- Self Study Mode - do the quiz at your own pace, to check your understanding.

- Classroom Mode - do the quiz synchronously with a classroom of students.

Most GTN tutorials use practicals and exercises as LEs.

They are most likely to show how to do things and give an idea of how a tool works.

In the dummy tutorial, the LO “Determine the best strategy to get data into Galaxy” may not be achievable with practicals alone.

Select and develop content relevant to the LOs

Select content

With LOs and LEs aligned, Phase 3 involves finding the most appropriate content to support learners to achieve the intended LOs. Regardless of where content is drawn from, what matters is how it supports the LOs.

Comment: Definitions of key terms

Content: a specific subject or topic item (e.g., DNA, RNA, proteins, a biochemical pathway, R programming) that is the target of learning

To this end, content selection should be judicious: it shouldn’t try to be all-encompassing (Nilson 2016), but should consider the target Bloom’s levels in the LOs, and the preparation of learners. Once content that’s considered to be the core of a course has been identified, additional auxiliary materials can also be selected, and offered, say, as ‘further reading’. Using LOs to drive content selection in this way thereby both provides focus (avoiding the temptation to squeeze as much as possible into a course to ensure coverage (McKeachie and Svinicki 2013, Lujan and DiCarlo 2006)), and increases the likelihood of accomplishing those LOs.

Sometimes, specific content may be deemed essential (De Veaux et al. 2017). Nevertheless, this must still support the LEs and promote achievement of the LOs; if it doesn’t, then additional content and/or LEs should be considered that will, or the LOs themselves should be revised, to prevent misalignment of instructional inputs and learning outcomes. Overall, the role of LOs here is to help focus on relevant content, and avoid material that’s either non-essential/too broad or too narrow.

If content is, or seems, fixed, it can be difficult to make the shift from content- to LO-driven decisions. In this case, Messick’s questions and Bloom’s taxonomy can be used to make adjustments so that what it means to learn that content, or what it looks like to have learned that content, can be made concrete and observable. If such fixed content does not lead to observable behaviours that are, or support the achievement of, LOs, then additional content should be considered that will; otherwise, it may be necessary to discuss the feasibility of modifying the content or revising the LOs with those who are able to make changes, to avoid misalignment of intended and actual curricula.

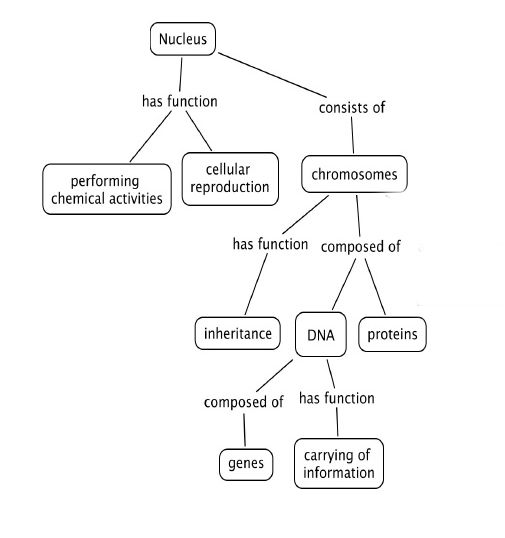

Defining content can be complicated, specially to identify how it can cover the different LOs. Trainers may need to tools to help them defining content, e.g. concept maps.

Concept maps

Concept maps are rough and visual scheme on how the different concepts are linked. They begin with a main idea (or concept) and then branch out to show how that main idea can be broken down into specific topics.

For example, the concepts around the nucleus could be represented using the following concept map:

Why concept maps? Concept maps are graphical tools for organizing and representing knowledge. They include concepts and the relationships between them.

How to use concept maps? Concept maps can be used by trainers to help build content, by breaking training into small pieces (7 ± 2 concepts) and by helping trainers and helpers to connect things together. A good concept map usually starts with a focused question and a context, and is built through iteration and feedback.

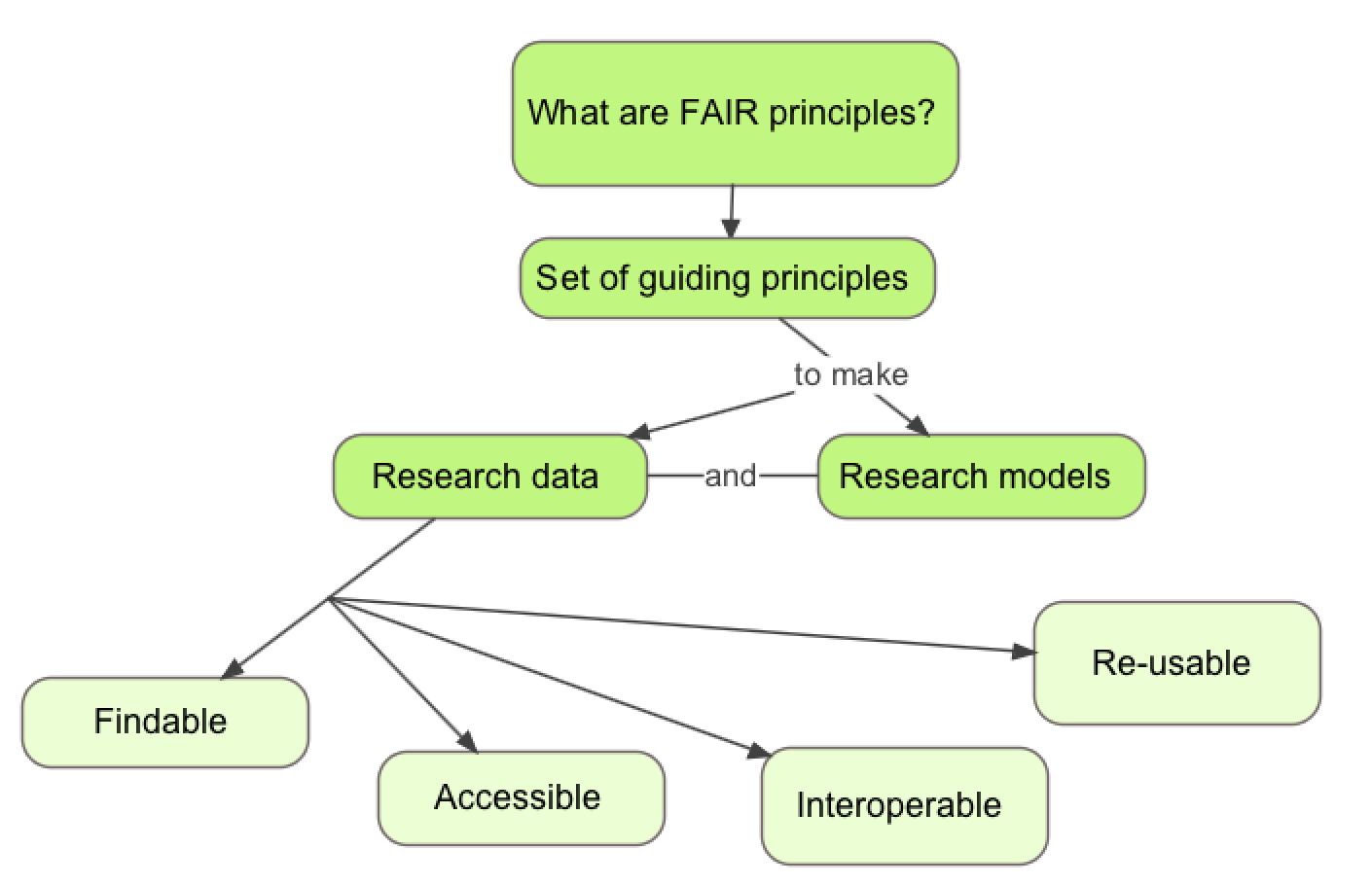

For example, to answer the questions “What are the FAIR principles?”

- we start by listing the keywords that should be discussed: Research data, Research models, Findable, Accessible, Interoperable, Re-usable

- we link them together in a concept map:

Concept maps try to be objective, but they depend on their creators. Everyone has their own concept map, just as two people living in the same city may each take a different route from point A to point B.

In curriculum/lesson/session planning, concept maps present key concepts in a highly concise manner. They can take into account the existing knowledge of learners and new concepts. This helps when drawing up the teaching plan to gauge how much can be covered, and the hierarchical organisation suggests a sequence in which to cover the material.

Hands-on: Draw a concept map - _⏰ 15 min - Silent working_

- Draw a concept map of your topic of interest

- Start with a question

- Include 7 (+/- 2) concepts

- Include relationships and cross-links between these concepts

- Arrange it in a hierarchical structure with the key concepts on top

- Count the items (concepts and links)
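If it helps with the counting step, a concept map can also be written down as a small graph. The sketch below is one possible representation, not a prescribed format: it stores the map as (concept, relationship, concept) triples, reuses the FAIR keywords listed earlier as concepts, and uses relationship labels that are invented purely for illustration. The helper name `summarise_concept_map` is likewise hypothetical.

```python
# Assumption: a concept map stored as (concept, relationship, concept) triples.
# The concepts come from the FAIR example; the relationship labels are invented.
links = [
    ("FAIR principles", "apply to", "Research data"),
    ("FAIR principles", "apply to", "Research models"),
    ("FAIR principles", "include", "Findable"),
    ("FAIR principles", "include", "Accessible"),
    ("FAIR principles", "include", "Interoperable"),
    ("FAIR principles", "include", "Re-usable"),
    ("Findable", "supports", "Accessible"),  # an example cross-link
]


def summarise_concept_map(links):
    """Count distinct concepts and links, and check the 7 +/- 2 guideline."""
    concepts = {c for source, _, target in links for c in (source, target)}
    n_concepts = len(concepts)
    return {
        "concepts": n_concepts,
        "links": len(links),
        "within_guideline": 5 <= n_concepts <= 9,
    }


summary = summarise_concept_map(links)
print(summary)
```

Writing the map as triples forces every link to carry a label, which makes missing or unclear relationships easy to spot when exchanging feedback.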

Hands-on: Give / receive feedback on concept maps - _⏰ 10 min - Group of 2 persons_

- Pair with someone

- Check the concept map of your partner

- Write to your partner

- One thing you are confused or unsure about in the map

- One thing you like or find clear about the map

For more details about concept maps, we recommend Novak and Cañas 2008 (PDF)

Content

- Content collection: collecting content appropriate to the needs and capabilities of your target audience

- Content reduction: one of the biggest challenges in designing training courses is reducing the content to fit the training format. Focus on the key points!

Hands-on: Producing the content and the training material - _⏰ 15 min_

Prepare the content for your 3 min training

The structure should be something like:

- 0:20 sec - Introduction

- 2:20 sec - Topic

- 0:20 sec - Conclusion
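A quick way to check that a plan fits its slot is to add up the segment durations. The sketch below does this for the structure suggested above; the helper `to_seconds` and the `plan` list are hypothetical names used only for this illustration, and durations are assumed to be written as `minutes:seconds`.

```python
def to_seconds(mmss: str) -> int:
    """Convert a 'minutes:seconds' string such as '2:20' into seconds."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


# The three segments of the suggested 3-minute structure.
plan = [
    ("Introduction", "0:20"),
    ("Topic", "2:20"),
    ("Conclusion", "0:20"),
]

total = sum(to_seconds(duration) for _, duration in plan)
target = 3 * 60  # the 3-minute slot, in seconds

print(f"Planned: {total} s, available: {target} s, fits: {total <= target}")
```

The same arithmetic applies to longer sessions: if the total exceeds the time available, the content needs to be reduced further.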

Use your concept map and adapt as needed

Template to help develop a plan:

| Time | Activity | Description | Goal |
|---|---|---|---|

For example, for a 1h15 session:

| Time | Activity | Description | Goal |
|---|---|---|---|
| 9:00 - 9:15 | Warm-up | Learners summarise the key points of each session from the previous day and answer questions from the audience. The instructor describes the plan of the day in detail | Retrieval from memory, repetition, get prepared for new topics, expose learners |
| 9:15 - 9:25 | Lecture | Python functions | Learning to write a function, about function input and output, and how to call the function |
| 9:20 - 10:00 | Practical activity | Two exercises to be solved in pairs on a single computer. After solving the 1st exercise, the “driver” and “navigator” will swap. 2 learners (1 / exercise) will display their solutions to the audience. Questions and discussion | Learners will be able to write and call a function calculating the distance between 2 points in 3D space and a function taking the base and height of a triangle as input and returning its area |
| 10:00 - 10:15 | Wrap-up | Group test on functions (match input and output with specific functions: fill gaps in pieces of code). Game: repetition using ball throwing | Assess learning. Do we need to work more on functions? Repeat meaning and usage of all Python objects introduced so far |

Develop assessments to ensure progression

In any course, learners benefit from having opportunities to show that they’re progressing. Generally, this is done using various types of assessment, including tests, feedback surveys, and so on. Nicholls’ model includes two different types of assessment, to evaluate:

- learning, to detect changes in learner performance during instruction, to identify their strengths, and diagnose their weaknesses – i.e., formative assessment (or feedback). This can inform decisions about how to modify instruction to better promote learning; it can also inform learners about changes they may need to make to improve their learning;

- instructional outcomes, to verify whether learners achieved the stated LOs after instruction – i.e., summative assessment. This can help to inform decisions both about learners (e.g., ranking their performances) and about the course (i.e., whether any of its LOs, LEs, etc. need to be redesigned).

Comment: Definitions of key termsFormative assessment: formal or informal assessments (or feedback) made during learning so that instruction or practice can be better targeted for learners to be able to fully achieve target LOs

Summative assessment: assessments made after a period of instruction in order to monitor whether LOs have been achieved

Formative and summative assessments (Walvoord 2010) are important for determining whether learning has occurred, and what has been learned. Summative assessment doesn’t generally yield information about learners’ progress during a course: it sums up what learning has been achieved after instruction relative to the intended outcomes (via written tests, practical tasks, or other measurable activities), and gives valuable data about learning attainment at the level of individuals and entire learner cohorts. Summative assessment can be tricky to organise in short courses, but may be necessary for those that give credits or offer certificates of completion.

Formative assessments are applied throughout a course; planned thoughtfully, they can improve the performance of learners and instructors. Ideally, they should be used often (say, every 15-20 minutes), thereby also yielding opportunities to change pace and refocus learners’ attention. It may be hard to conceive how to integrate such assessments into a course, but they need not be complex or time-consuming (just informative about learning at a given point).
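One simple way to plan for this is to mark, in advance, the points in a session where you will pause for a quick check. The sketch below is only an illustration: the function name `checkpoint_minutes` and the default 15-minute interval are assumptions made for this example, based on the rule of thumb above.

```python
def checkpoint_minutes(session_length, interval=15):
    """Return the minutes (from session start) at which to pause for a quick check.

    Both arguments are in minutes. No checkpoint is placed at minute 0
    or at the very end of the session.
    """
    if interval <= 0:
        raise ValueError("interval must be positive")
    return list(range(interval, session_length, interval))


# A 75-minute session with checks every 15 or every 20 minutes.
print(checkpoint_minutes(75))      # [15, 30, 45, 60]
print(checkpoint_minutes(75, 20))  # [20, 40, 60]
```

The resulting list is a starting point only; in practice, checks are best placed at natural breaks between topics rather than at exact clock times.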

The most effective way to test student understanding is to do so in class: it’s important to seize the moment, and deal with potential misunderstandings as soon as they arise. These in-class tips may be helpful:

- reflection: towards the end of a training session, ask learners to reflect on, and write down, a list of new concepts and skills they’ve learned. Ask them to consider how they’d apply these concepts or skills in a practical setting;

- agreed signals: gauge learners’ satisfaction with a training session by asking them to use agreed signals (e.g., raising coloured post-it notes to indicate that the pace is too fast/slow, etc.). This engages all learners, and allows you to check their confidence with the content and its delivery, even in large groups;

- 3-2-1: at the end of a training session, ask learners to note 3 things they learned, 2 things they want to know more about, and 1 question they have. This stimulates reflection on the session, and helps to process their learning;

- misconception check: present some common or predictable misconceptions about a concept you’ve covered. Ask learners whether they agree or disagree, and to explain why;

- diagnostic questions/questionnaires (which may be anonymous): ask learners to note one thing they didn’t understand or that they missed, and one thing that was very clear to them, or make them complete a multiple choice quiz, then display and discuss the answers with them.

These, and many other simple assessments, may be found in Briggs’ online list of 21 ways to check for student understanding.

If formative assessments are used frequently, and lead to specific decisions by learners and instructors, then instruction (or learner preparation) can be modified to better develop the target LOs (Dylan and Thompson 2008, Trumbull and Lash 2013). Such assessments can be designed to anticipate, and identify which learners are experiencing, common misunderstandings, and gauge their readiness to move ahead; they can also help learners to identify their own strengths and weaknesses by encouraging reflection on what they do/don’t know or are/aren’t confident about, honing their abilities to self-assess (Ambrose et al. 2010). Furthermore, ideas for how to address any issues they’ve found, or for further learning, can be built in (e.g., “if you chose option C, you might want to re-read the handout”); formative assessments can therefore also support self-instruction.
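As an illustration of building guidance into a formative assessment, the sketch below attaches a feedback message to each wrong option of a multiple-choice question, and tallies the answers so that an instructor can spot common misunderstandings. The question, its options, and the names `QUESTION`, `respond` and `tally` are all invented for this example.

```python
from collections import Counter

# Hypothetical multiple-choice question; the wording is invented for illustration.
QUESTION = {
    "prompt": "Which type of assessment is carried out during learning?",
    "options": {"A": "Formative", "B": "Summative", "C": "Course evaluation"},
    "correct": "A",
    # Feedback for each wrong option points the learner to a next step.
    "feedback": {
        "B": "Summative assessment happens after instruction; revisit the definitions of key terms.",
        "C": "Course evaluation looks at the course as a whole; you might want to re-read the handout.",
    },
}


def respond(question, choice):
    """Return the feedback text for a learner's choice."""
    if choice not in question["options"]:
        raise ValueError(f"unknown option: {choice}")
    if choice == question["correct"]:
        return "Correct."
    return question["feedback"][choice]


def tally(choices):
    """Count how often each option was chosen across a group of learners."""
    return Counter(choices)


print(respond(QUESTION, "C"))
print(tally(["A", "C", "C", "B"]).most_common(1))  # [('C', 2)]
```

The per-option feedback supports self-instruction, while the tally tells the instructor which misunderstanding is worth addressing with the whole group.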

Using actionable formative and summative assessment can help to ensure that LOs, and progress towards them, are explicitly supported. LOs clarify what specifically needs to be assessed and why.

Quiz: Evaluate assessment in GTN tutorials

Check your knowledge with a quiz!

- Self Study Mode - do the quiz at your own pace, to check your understanding.

- Classroom Mode - do the quiz synchronously with a classroom of students.

Hands-on: Assessment in mini-training - _⏰ 5 min_

- Take the content of your 3 min training

- Analyse the flow of the lesson and identify points where you could introduce formative-feedback sessions

- For each portion of the lesson between two sets of feedback, imagine (& write down) a quick activity – an exercise, a question, a reflection – that learners could carry out that would help you understand whether they’re following the lesson & that learning is occurring, or whether they’re lagging behind or are lost.

Evaluate course effectiveness

The final step is to perform an actionable evaluation by collecting qualitative and quantitative course data to assess its effectiveness in leading learners to the stated LOs. Summative assessments can be useful here: e.g., if they reveal uniformly low levels of achievement, it may indicate that future revisions are needed to ensure that LEs, assessments and LOs are aligned; it could also flag problems with the assessment itself (poorly worded test questions, ambiguous response options, etc.). It’s vital to understand whether performance reflects learners’ actual level of learning and, if it doesn’t, to revise the assessment so that it does.

Even if a full quantitative course evaluation isn’t possible, it may still be possible to solicit actionable evidence of its impact via short- or long-term feedback surveys. It’s tempting to use completion rates or learner satisfaction as proxies for success; however, although simple to collate, these outcomes aren’t informative about learners’ growth or course effectiveness. Satisfaction surveys often use pre- and post-course questionnaires (Jordan et al. 2018, Gurwitz et al. 2020) to collect demographic details, and solicit learners’ self-evaluations and reactions to a course. Reviewing learners’ perceptions can help to identify whether the conditions for learning were present, but alone will not shed light on whether the intended LOs were achieved: learner perceptions may be affected by factors unrelated to course effectiveness, and their self-assessments may be biased (e.g., less-skilled learners notoriously overestimate their abilities – the Dunning-Kruger effect (Kruger and Dunning 1999)).

There are many evaluation methods, each with advantages and disadvantages; all concur that multiple features need to be considered. One framework collates learner reactions, their actual learning, changes in their behaviour, and the impact of the course on their organisation.

Evaluation methods provide systematic frameworks for analysing the effectiveness of training courses. The first approach was introduced by Raymond Katzell in 1956 and later popularised by Kirkpatrick (Kirkpatrick 1979, Kirkpatrick and Kirkpatrick 2006). The Kirkpatrick-Katzell method proposes a four-level strategy:

- Reaction – what do learners feel about the training?

- Learning – what did learners retain from the training?

- Behaviour – did learners put their learning into practice on-the-job (did their working behaviour change)?

- Results – did their behavioural changes have an overall impact on their organisation (e.g., greater productivity)?

Surveying each level helps collect qualitative and quantitative data to evaluate training effectiveness. Note: this isn’t a real taxonomy, as there’s no evidence that outcomes at successive levels are linked (e.g., no correlation has been found between the reaction and learning stages; Gessler 2009). However, if level 2 results indicate that learning didn’t occur, those from level 1 may identify aspects of a course that disappointed learners and help to understand what should be improved. Many other approaches to training evaluation have been developed, each focusing on slightly different levels or stages.

Such features can be explicitly targeted in short- or long-term surveys: e.g., end-of-course, summative quizzes can be used to test achievement of intended LOs; longer-term questions (say, 6-12 months after a course) can focus on the extent to which learners have put their acquired KSAs into working practice; and so on. Overall, it’s important to choose an appropriate evaluation method for your circumstances, and, alongside learner reactions to the course, for your evaluation to consider what results you expected to achieve, whether the LOs were achieved, and whether learners’ on-the-job practices changed.

Actionable evaluation is essential for identifying aspects of a course that may benefit from intervention, leading to concrete decisions about what needs to be remediated and why (Worthen et al. 1997). Designing meaningful evaluations requires thought; in some cases, it may help to appoint independent evaluators or advisory boards, as external reviewers can give objective appraisals, and may also help to frame the course against national/international standards. Ultimately, the evaluation should identify what works in a course, and what needs remediation, to better support the achievement of the intended LOs.

Quiz: Evaluate effectiveness in GTN tutorials

Check your knowledge with a quiz!

- Self Study Mode - do the quiz at your own pace, to check your understanding.

- Classroom Mode - do the quiz synchronously with a classroom of students.

Tutorials are evaluated using

- Embedded feedback forms at the

- Collecting feedback at the end of courses

Testing the mini-training

Hands-on: Delivery planning - _⏰ 10 min - Silent working_

- Think about whether you want to make your training interactive

- Think whether you need or want to use a visual support (images)

- Think whether you need to distribute material in advance to the audience

- Prepare for your choices

- Be creative!

Hands-on: Deliver mini-training and Give / receive feedback on its delivery - _⏰ 15 min - Groups of 2 persons_

Each of you delivers your 3-minute session to the others, with 1 person noting down feedback in real time

- Listen actively and attentively

- Ask for clarification if you are confused

- Do not interrupt one another

Collect constructive feedback, both positive and negative, on content and presentation, using the following matrix as a template:

| | Content | Presentation |
|---|---|---|
| Positive | | |
| Negative | | |

- Challenge one another, but do so respectfully

- Critique ideas, not people

- Do not offer opinions without supporting evidence

- Take responsibility for the quality of the discussion

- Build on one another’s comments; work toward shared understanding.

- Do not monopolise discussion.

- Speak from your own experience, without generalizing.

- If you are offended by anything said during discussion, acknowledge it immediately.

- Describe your own feedback on your delivery

- Provide feedback to the presenter

- Share with the group any insights, thoughts or comments from your breakout room

Training material: sharing and making re-use possible

When developing a course, it’s helpful to document the design process, including details of how it was conceptualised, the assumptions and decisions made along the way, the assessment criteria, etc., and to share that documentation with instructors and learners (this can be done via community mailing lists, through blog posts, collaborative repositories, social channels, using GitHub, Slack, etc.). By way of example, The Carpentries provide instructor notes for most of their courses. These are collectively-written documents that reflect on the strengths and weaknesses of the course design (and its materials), what did/didn’t work, suggested improvements, tips for teaching, challenges encountered, learner feedback, and indications of where alignment of LOs/LEs/content/assessments failed, and why.

Question: Resources in the Galaxy Training Network

What resources does the Galaxy Training Network provide for documenting training material?

- Gitter / Matrix channel

- GitHub Pull Request

- Details box in tutorials

- Feedback page

- Workshop Instructor Feedback

Best practices like this help course designers and communities of trainers to understand what was intended, what was done, and, where the two differ, why. This is particularly valuable for courses whose materials were not developed by their instructors. Such documentation can thus facilitate reflection and promote good practice, and can help new instructors prepare to deliver the course. If made available to learners, it can help them to understand what they can expect from a course, and make informed decisions about whether it will help them to achieve their learning goals; it may also help them to better gauge their performance, and to identify what will help them perform better – it may therefore also improve learning outcomes.

Just as data and models should be FAIR (Findable, Accessible, Interoperable & Re-usable), training materials (slides, exercises, datasets, etc.) should also be FAIR:

- Findable - can be searched and found by the trainer community

- Accessible - can be read/downloaded by other trainers

- Interoperable - can be understood clearly in the context of the original course

- Re-usable - can be used by other trainers

How can you make training material FAIR? You can follow the recommendations from Garcia et al. 2020.

Question: Is GTN material FAIR?

Look at any GTN tutorial (e.g., the dummy tutorial)

Is this training material FAIR? If so, how?

This infrastructure has been developed in accordance with the FAIR (Findable, Accessible, Interoperable, Reusable) principles for training materials (Garcia et al. 2020). Following these principles enables trainers and trainees to find, reuse, adapt, and improve the available tutorials.

| 10 Simple Rules | Implementation in GTN framework |
|---|---|
| Plan to share your training materials online | Online training material portfolio, managed via a public GitHub repository |
| Improve findability of your training materials by properly describing them | Rich metadata associated with each tutorial that are visible and accessible via schema.org on each tutorial webpage. |
| Give your training materials a unique identity | URL persistency with redirection in case of renaming of tutorials. Data used for tutorials stored on Zenodo and associated with a Digital Object Identifiers (DOI) |
| Register your training materials online | Tutorials automatically registered on TeSS, the ELIXIR’s Training e-Support System |
| If appropriate, define access rules for your training materials | Online and free to use without registration |
| Use an interoperable format for your training materials | Content of the tutorials and slides written in Markdown. Metadata associated with tutorials stored in YAML, and workflows in JSON. All of this metadata is available from the GTN’s API |
| Make your training materials (re-)usable for trainers | Online. Rich metadata associated with each tutorial: title, contributor details, license, description, learning outcomes, audience, requirements, tags/keywords, duration, date of last revision. Strong technical support for each tutorial: workflow, data on Zenodo and also available as data libraries on UseGalaxy.*, tools installable via the Galaxy Tool Shed, list of possible Galaxy instances with the needed tools. |
| Make your training materials (re-)usable for trainees | Online and easy to follow hands-on tutorials. Rich metadata with “Specific, Measurable, Attainable, Realistic and Time bound” (SMART) learning outcomes following Bloom’s taxonomy. Requirements and follow-up tutorials to build learning path. List of Galaxy instances offering needed tools, data on Zenodo and also available as data libraries on UseGalaxy.*. Support chat embedded in tutorial pages. |
| Make your training materials contribution friendly and citable | Open and collaborative infrastructure with contribution guidelines, a CONTRIBUTING file and a chat. Details to cite tutorials and give credit to contributors available at the end of each tutorial. |
| Keep your training materials up-to-date | Open, collaborative and transparent peer-review and curation process. Short time between updates. |
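Rich metadata is only useful if it is complete. As a minimal sketch of how a trainer might check their own material before sharing it, the example below tests a metadata record against the items listed for re-usability (title, contributor details, license, and so on). The field names and the helper `missing_metadata` are hypothetical; this is not the GTN’s actual metadata schema.

```python
# Field names are hypothetical, derived from the metadata items listed above;
# they do not reproduce the GTN's actual schema.
REQUIRED_FIELDS = [
    "title", "contributors", "license", "description", "learning_outcomes",
    "audience", "requirements", "tags", "duration", "last_revision",
]


def missing_metadata(metadata):
    """Return the required fields that are absent or empty in a metadata dict."""
    return [field for field in REQUIRED_FIELDS if not metadata.get(field)]


# A deliberately incomplete record for this tutorial.
example = {
    "title": "Design and plan session, course, materials",
    "duration": "60 minutes",
    "last_revision": "2022-10-18",
}

print(missing_metadata(example))
# ['contributors', 'license', 'description', 'learning_outcomes',
#  'audience', 'requirements', 'tags']
```

A check like this can be run whenever material is updated, so that gaps in its description are caught before it is registered or shared.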

Creating and sharing documentation with instructors and learners fosters the development of communities of best practice, and can support both learning and the success of a course.

Conclusion

Course design is prefaced by determining the purpose of the programme, analysing the context in which the course will be delivered and who will benefit. Fundamental to this process is to identify the needs the course will address, its target audience, and the prerequisite KSAs that learners must have in order to profit most from the course. Identifying the target audience and learner prerequisites helps to define criteria for selecting participants, should a limit be needed to maximise course effectiveness.

Once such ‘situational analysis’ has been completed, the starting point, and pivotal reference for all subsequent stages of the course-design process, is to articulate SMART LOs. As part of this process, Bloom’s taxonomy is useful for defining LOs that are measurable, and whose cognitive complexity increases along a developmental trajectory; and Messick’s questions help both to select KSAs and LOs, and to ensure alignment of instruction and assessment. Crucially, informed choices need to be made about the LEs (and the content they use) that best align with the goal of achieving specific LOs in the time available. General topic areas (life sciences, computer science, data science, etc.) may provide the overarching framework, but your goals for learners should drive how content is selected, taught and assessed. In terms of assessment approaches, formative assessment is generally more relevant for short courses, but summative assessment may be necessary for accredited courses. Ultimately, it’s important to understand what each type of assessment contributes to the course-design process and to build your practice to maximise the effectiveness of each.

Evaluating course effectiveness should be the final stage of a robust design process, and the first step towards course re-design and improvement, should the course be delivered regularly. To do this rigorously, it’s helpful to employ a multi-level evaluation strategy, in which learner satisfaction is just one strand (used alone, learner satisfaction is not a reliable metric of success).

Course design requires thought and time. Successful courses support learners as they develop from entry-level performance to the minimum performance level for achieving the target LOs. If designed specifically to support learners and LOs, and evaluated against that objective, the instruction that’s delivered and learning that’s intended are more likely to match. This can’t guarantee success for all learners, but does create the optimal circumstances for success.

Structured approaches benefit course design by leveraging what’s already known about learning, and providing a framework for decision-making. The process can be challenging, but investing in it is likely to pay dividends. Ultimately, everything in the design should lead to, and support development of, the LOs that learners should possess, and be able to demonstrate, on completion of a course. For ease of reference, the approach outlined here can be distilled into the simple set of recommendations summarised:

| N | Recommendation | Benefits |
|---|---|---|
| 1 | Follow a structured paradigm for course design. | Leverages what’s known about education & learning; provides a framework for decision-making. |
| 2 | Focus on LOs first, to inform all other course-design decisions | LOs provide context for decision-making by instructors & learners. |
| 2.1 | Leverage LOs to determine appropriate LEs | LOs help to choose LEs that support learners to achieve the LOs. |
| 2.2 | Leverage LOs to select content that promotes achievement of the LOs. | LOs help to focus on relevant content, & to avoid material that is non-essential &/or too narrow |
| 2.3 | Assess learners’ progress towards LOs & the achievement of LOs using formative & summative assessment, respectively | LOs clarify what specifically needs to be assessed & why. |
| 3 | Plan & execute an actionable course evaluation. | Helps identify what works & what to remedy in the course to better support achievement of LOs. |
| 4 | Document & share the course features with learners. | Helps to support learning & promotes success of the course. |

Key points

Course development is a multi-step, iterative process

The first step is to identify intended (SMART) LOs

Identification of key content only becomes relevant after LOs & suitable LEs have been identified

Bloom’s taxonomy can facilitate the articulation of measurable LOs, & support the development of cognitive complexity through them

Messick’s questions help to align KSAs, instruction & assessment

For successful outcomes, learners & the course must be evaluated (it isn’t sufficient simply to note ‘completers’ or learner satisfaction)

A successful course guides learners from entry-level performance to the minimum performance level for achieving its target LOs

In a course designed to support learners & LOs, the instruction that’s delivered & learning that’s intended are more likely to align, thereby providing the optimal circumstances for learner success.

Frequently Asked Questions

Have questions about this tutorial? Check out the FAQ page for the Contributing to the Galaxy Training Material topic to see if your question is listed there. If not, please ask your question on the GTN Gitter Channel or the Galaxy Help Forum.

Quizzes

Check your understanding with these quizzes

Evaluate assessment in GTN tutorials

- Self Study Mode - do the quiz at your own pace, to check your understanding.

- Classroom Mode - do the quiz synchronously with a classroom of students.

LEs in GTN tutorials

- Self Study Mode - do the quiz at your own pace, to check your understanding.

- Classroom Mode - do the quiz synchronously with a classroom of students.

Evaluate Learning outcomes

- Self Study Mode - do the quiz at your own pace, to check your understanding.

- Classroom Mode - do the quiz synchronously with a classroom of students.

Evaluate effectiveness in GTN tutorials

- Self Study Mode - do the quiz at your own pace, to check your understanding.

- Classroom Mode - do the quiz synchronously with a classroom of students.

References

- UNESCO IBE Glossary of Curriculum-Related Terminology. Accessed 2022-03-02. http://www.ibe.unesco.org/en/glossary-curriculum-terminology/a/attained-curriculum

- The glossary of Education Reform. Accessed 2022-03-02. http://www.edglossary.org/hidden-curriculum

- Stanford Institutional Research & Decision Support. Accessed 2022-03-02. http://irds.stanford.edu/sites/g/files/sbiybj10071/f/clo.pdf

- Bloom, B. S., 1956 Taxonomy of educational objectives: The classification of educational goals. Cognitive domain.

- Kirkpatrick, D. L., 1979 Techniques for evaluating training programs. Training and development journal.

- Messick, S., 1994 The interplay of evidence and consequences in the validation of performance assessments. Educational researcher 23: 13–23.

- Worthen, B. R., J. R. Sanders, and J. L. Fitzpatrick, 1997 Program evaluation. Alternative approaches and practical guidelines 2:

- Kruger, J., and D. Dunning, 1999 Unskilled and unaware of it: how difficulties in recognizing one’s own incompetence lead to inflated self-assessments. Journal of personality and social psychology 77: 1121.

- Anderson, L. W., D. R. Krathwohl, P. W. Airasian, K. A. Cruikshank, R. E. Mayer et al., 2001 A taxonomy for learning, teaching, and assessing: A revision of Bloom’s taxonomy of educational objectives, abridged edition. White Plains, NY: Longman 5:

- Nicholls, G., 2002 Developing teaching and learning in higher education. Routledge.

- Kirkpatrick, D., and J. Kirkpatrick, 2006 Evaluating training programs: The four levels. Berrett-Koehler Publishers.

- Lujan, H. L., and S. E. DiCarlo, 2006 Too much teaching, not enough learning: what is the solution? Advances in Physiology Education 30: 17–22.

- Roediger III, H. L., and J. D. Karpicke, 2006 The power of testing memory: Basic research and implications for educational practice. Perspectives on psychological science 1: 181–210.

- Diamond, R. M., 2008 Designing and assessing courses and curricula: A practical guide. John Wiley & Sons.

- Dylan, W., and M. Thompson, 2008 Integrating assessment with instruction: what will it take to make it work. The future of assessment: shaping teaching and learning. New York: Lawrence Erlbaum Associates 9781315086545–3.

- Novak, J. D., and A. J. Cañas, 2008 The theory underlying concept maps and how to construct and use them.

- European Centre for the Development of Vocational Training, 2008 Terminology of European education and training policy: A selection of 100 key terms. Office for Official Publ. of the Europ. Communities.

- Gessler, M., 2009 The correlation of participant satisfaction, learning success and learning transfer: an empirical investigation of correlation assumptions in Kirkpatrick’s four-level model. International Journal of Management in Education 3: 346–358.

- Ambrose, S. A., M. W. Bridges, M. DiPietro, M. C. Lovett, and M. K. Norman, 2010 How learning works: Seven research-based principles for smart teaching. John Wiley & Sons.

- Walvoord, B. E., 2010 Assessment clear and simple: A practical guide for institutions, departments, and general education. John Wiley & Sons.

- Fink, L. D., 2013 Creating significant learning experiences: An integrated approach to designing college courses. John Wiley & Sons.

- McKeachie, W., and M. Svinicki, 2013 McKeachie’s teaching tips. Cengage Learning.

- Trumbull, E., and A. Lash, 2013 Understanding formative assessment. Insights from learning theory and measurement theory. San Francisco: WestEd 1–20.

- Jensen, J. L., M. A. McDaniel, S. M. Woodard, and T. A. Kummer, 2014 Teaching to the test… or testing to teach: Exams requiring higher order thinking skills encourage greater conceptual understanding. Educational Psychology Review 26: 307–329.

- Nilson, L. B., 2016 Teaching at its best: A research-based resource for college instructors. John Wiley & Sons.

- National Institute for Learning Outcomes Assessment, 2016 Higher Education Quality: Why Documenting Learning Matters.

- De Veaux, R. D., M. Agarwal, M. Averett, B. S. Baumer, A. Bray et al., 2017 Curriculum guidelines for undergraduate programs in data science. Annual Review of Statistics and Its Application 4: 15–30.

- Jordan, K., F. Michonneau, and B. Weaver, 2018 Analysis of Software and Data Carpentry’s Pre- and Post-Workshop Surveys. 10.5281/zenodo.1325464

- Weinstein, Y., M. Sumeracki, and O. Caviglioli, 2018 Understanding how we learn: A visual guide. Routledge.

- Wiegers, L., and C. W. G. van Gelder, 2019 Illustration for "Ten simple rules for making training materials FAIR". 10.5281/ZENODO.3593257 https://zenodo.org/record/3593257

- Garcia, L., B. Batut, M. L. Burke, M. Kuzak, F. Psomopoulos et al., 2020 Ten simple rules for making training materials FAIR (S. Markel, Ed.). PLOS Computational Biology 16: e1007854. 10.1371/journal.pcbi.1007854

- Gurwitz, K. T., P. Singh Gaur, L. J. Bellis, L. Larcombe, E. Alloza et al., 2020 A framework to assess the quality and impact of bioinformatics training across ELIXIR. PLoS computational biology 16: e1007976.

- Tractenberg, R. E., J. M. Lindvall, T. Attwood, and A. Via, 2020 Guidelines for curriculum and course development in higher education and training.

- Via, A., P. M. Palagi, J. M. Lindvall, R. E. Tractenberg, T. K. Attwood et al., 2020 Course design: Considerations for trainers–a Professional Guide. F1000Research 9:

Citing this Tutorial

- Bérénice Batut, 2022 Design and plan session, course, materials (Galaxy Training Materials). https://training.galaxyproject.org/training-material/topics/contributing/tutorials/design/tutorial.html Online; accessed TODAY

- Batut et al., 2018 Community-Driven Data Analysis Training for Biology Cell Systems 10.1016/j.cels.2018.05.012

@misc{contributing-design,
  author = "Bérénice Batut and Fotis E. Psomopoulos and Allegra Via and Patricia Palagi and ELIXIR Goblet Train the Trainers",
  title = "Design and plan session, course, materials (Galaxy Training Materials)",
  year = "2022",
  month = "10",
  day = "18",
  url = "\url{https://training.galaxyproject.org/training-material/topics/contributing/tutorials/design/tutorial.html}",
  note = "[Online; accessed TODAY]"
}

@article{Batut_2018,
  doi = {10.1016/j.cels.2018.05.012},
  url = {https://doi.org/10.1016%2Fj.cels.2018.05.012},
  year = 2018,
  month = {jun},
  publisher = {Elsevier {BV}},
  volume = {6},
  number = {6},
  pages = {752--758.e1},
  author = {B{\'{e}}r{\'{e}}nice Batut and Saskia Hiltemann and Andrea Bagnacani and Dannon Baker and Vivek Bhardwaj and Clemens Blank and Anthony Bretaudeau and Loraine Brillet-Gu{\'{e}}guen and Martin {\v{C}}ech and John Chilton and Dave Clements and Olivia Doppelt-Azeroual and Anika Erxleben and Mallory Ann Freeberg and Simon Gladman and Youri Hoogstrate and Hans-Rudolf Hotz and Torsten Houwaart and Pratik Jagtap and Delphine Larivi{\`{e}}re and Gildas Le Corguill{\'{e}} and Thomas Manke and Fabien Mareuil and Fidel Ram{\'{\i}}rez and Devon Ryan and Florian Christoph Sigloch and Nicola Soranzo and Joachim Wolff and Pavankumar Videm and Markus Wolfien and Aisanjiang Wubuli and Dilmurat Yusuf and James Taylor and Rolf Backofen and Anton Nekrutenko and Björn Grüning},
  title = {Community-Driven Data Analysis Training for Biology},
  journal = {Cell Systems}
}

Funding

These individuals or organisations provided funding support for the development of this resource

This project (2020-1-NL01-KA203-064717) is funded with the support of the Erasmus+ programme of the European Union. Their funding has supported a large number of tutorials within the GTN across a wide array of topics.

Developing GTN training material

This tutorial is part of a series to develop GTN training material; feel free to also look at:

- Overview of the Galaxy Training Material

- Adding auto-generated video to your slides

- Adding Quizzes to your Tutorial

- Contributing with GitHub via command-line

- Contributing with GitHub via its interface

- Creating a new tutorial

- Creating content in Markdown

- Creating Interactive Galaxy Tours

- Creating Slides

- Design and plan session, course, materials

- Generating PDF artefacts of the website

- GTN Metadata

- Including a new topic

- Principles of learning and how they apply to training and teaching

- Running the GTN website locally

- Running the GTN website online using GitPod

- Teaching Python

- Tools, Data, and Workflows for tutorials

- Updating diffs in admin training
